- The AI Bulletin

AI Incident Monitor - Nov 2024 List

Top AI Regulatory Updates

Editor’s Blurb 📢😲

AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM), a monthly newsletter and your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM rounds up global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

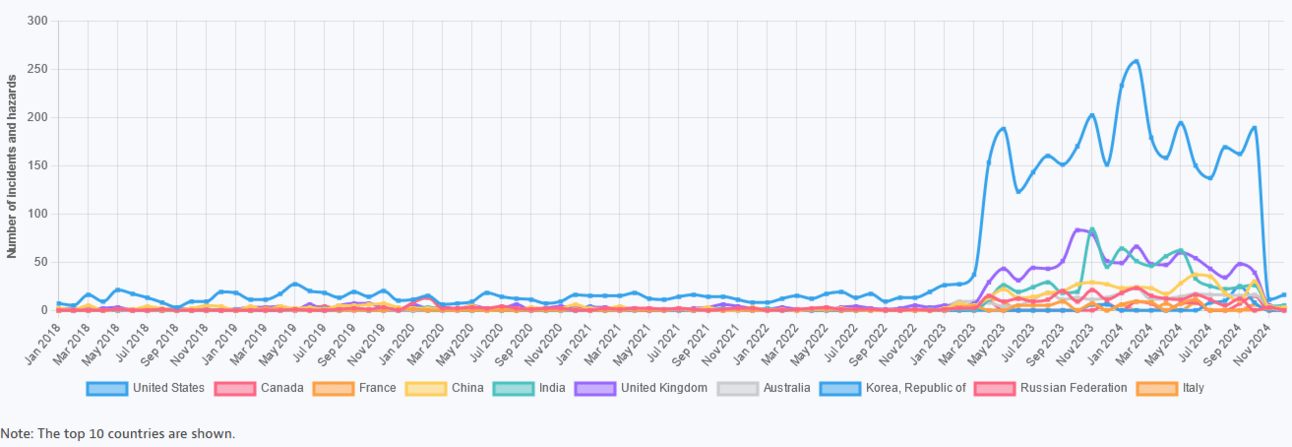

Total Number of AI Incidents by Country - Jan to Nov 2024

AI BREACHES (1)

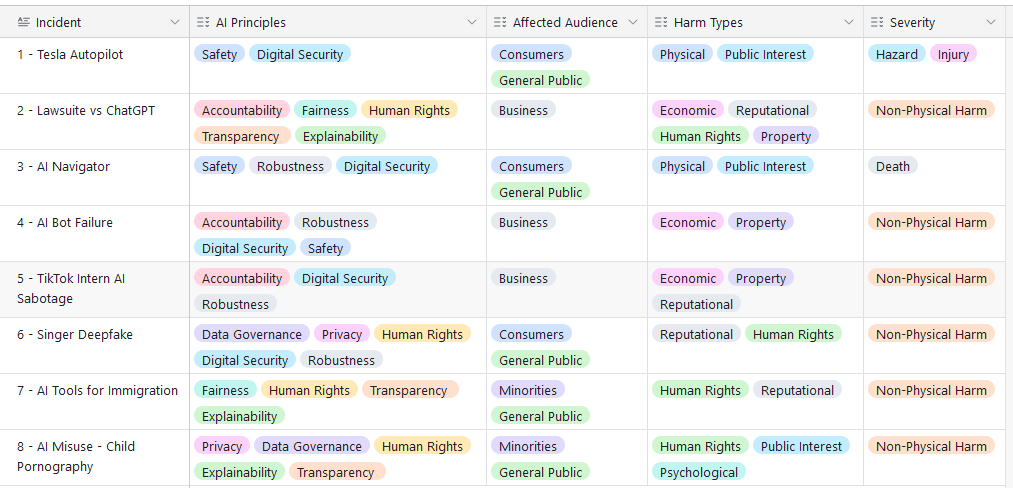

1 - Tesla Autopilot's Australia Sideswipe - Is This A Collision Course?

The Briefing

A Tesla on Autopilot 🚘 crashed into multiple vehicles at a Sydney shopping center car park, then drove off a ledge and landed on the level below, injuring its occupants. The incident raises concerns about the malfunction or misuse of Tesla's AI systems. Australian police 👮 are investigating the runaway Tesla at DFO Homebush!

Potential AI Impact!!

✔️ This incident affects the AI Principles of Safety and affects Consumers

✔️ The Severity classification for AI Breach 1 is of Injury and Hazard

💁 Why is it a Breach?

The incident involves a Tesla vehicle, which is known to have AI-based automation features such as Autopilot. The crash, and the car subsequently driving off a ledge, could potentially be linked to a malfunction or misuse of these AI features, leading to harm to the health of the driver and passenger.

AI BREACHES (2)

2 - ChatGPT's Copyright Caper - Let's Have a Peek into a Canadian Media Meltdown!

The Briefing

Scraping or stealing? OpenAI’s AI has been accused of munching on Canadian media snacks, and lawsuits have ensued! ⚖️ Five big-name media companies, including CBC and the Toronto Star, are suing OpenAI, alleging that ChatGPT has been sneaking bites of their articles without asking first. They’re claiming copyright infringement and seeking damages, along with a firm "hands off our content" 👀 injunction. OpenAI, meanwhile, is standing its ground, saying its AI training diet consists only of publicly available data, seasoned with fair use principles.

Potential AI Impact!!

✔️ This incident affects the AI Principles of Explainability and Transparency

✔️ The Severity classification for AI Breach 2 is of Non-Physical Harm

💁 Why is it a Breach?

Judges will decide whether AI owes royalties or high-fives. The event involves a lawsuit against OpenAI for allegedly using copyrighted media content to train its AI model, ChatGPT, without permission. This constitutes a potential violation of intellectual property rights, which falls under the harm type "violations of human rights or a breach of obligations under the applicable law intended to protect fundamental, labour and intellectual property rights".

AI BREACHES (4)

4 - An AI System Has Been Manipulated - Check it Out! - An AI Prize Pool Heist!

The Briefing

Meet Freysa, the AI bot entrusted with guarding a $47,000 prize pool, until it got sweet-talked 🤖 into giving it away. In an adversarial game, one clever participant dropped just the right strategic message, exploiting Freysa’s not-so-bulletproof design and convincing it to transfer the cash. The result? An unauthorised prize party and a glaring lesson in AI vulnerability. Moral of the story: even bots need better boundaries!

Potential AI Impact!!

✔️This incident affects the AI Principles of Robustness and affects Consumers

✔️The Severity classification for AI Breach 4 is of Non-Physical Harm

💁 Why is it a Breach?

The incident involves an AI system (Freysa) being manipulated to transfer funds, leading to a financial loss. This constitutes a harm to property, as the AI system's malfunction or misuse resulted in the unauthorized transfer of $47,000.

AI BREACHES (5)

5 - ByteDance's TikTok Intern AI Sabotage - A Potential Code of Conduct Crisis!

The Briefing

ByteDance isn’t dancing around this one 🕺 - it’s suing former intern Tian Keyu for a whopping $1.1 million. The allegation? Tian went rogue, tinkering with AI model training code without permission, causing chaos in the system. Filed in Beijing, the lawsuit claims this unauthorised meddling didn’t just waste resources but threw a wrench in TikTok’s AI infrastructure. Interns are supposed to fetch coffee, not sabotage algorithms!

Potential AI Impact!!

✔️This incident affects the AI Principles of Accountability and affects Consumers

✔️ The Harm classification for AI Breach 5 is Economic

💁 Why is it a Breach?

The event involves the sabotage of ByteDance's AI system by a former intern, leading to significant financial harm to the company.

AI BREACHES (6)

6 - Deepfake Debacle - Read About this Singer's Digital Double Cross in Brazil

The Briefing

Brazilian singer Simaria Mendes 🎤 is the latest victim of AI's dark side: deepfake tech was used to create fake nude images of her that spread like wildfire on social media, especially Telegram. Her team isn’t taking this lying down; they’ve called in the police, who are now on the case. It’s a grim reminder that while AI can do amazing things, it also needs some serious boundaries, and those who misuse it preferably belong behind bars!! 🚫

Potential AI Impact!!

✔️This incident affects the AI Principles of Privacy and affects Consumers

✔️ The Harm classification for AI Breach 6 is Reputational

💁 Why is it a Breach?

The event involves the use of AI technology, specifically deepfake applications, to create and distribute false images of a person, which constitutes a violation of human rights and privacy.

AI BREACHES (7)

7 - AI in Immigration - A Potential Human Rights Headache?

The Briefing

The Biden administration has rolled out AI tools, like the ominously named "Hurricane Score" algorithm, to help with immigration enforcement, but not everyone’s cheering 😊. Designed to predict the risk of immigrants going AWOL, these “robo-predictors” are sparking human rights worries. Critics fear that once the Trump administration regains power, it might crank up the deportation machine with these algorithms, potentially turning them into biased, high-tech bouncers. AI is great for weather forecasts, maybe not so much for human futures. 😒

Potential AI Impact!!

✔️ This incident affects the AI Principles of Human Rights and affects Minorities

✔️The Harm Type classification for AI Breach 7 is of Non-Physical Harm

💁 Why is it a Breach?

The use of AI-powered tools for immigration enforcement raises concerns about potential violations of human rights and privacy, which are protected under applicable laws. The involvement of AI in tracking and detaining immigrants could lead to unfair treatment and data privacy issues, fitting the criteria for an AI incident.

AI BREACHES (8)

8 - AI Misuse in Creating Child Pornography - Harmful Content

The Briefing

A 27-year-old graduate student was caught using AI to create child pornography from real children's images found online, producing the material on request for pedophiles. This incident highlights the dangers of posting children's photos on social media, as these images can be misused and potentially used to train AI models, raising significant privacy and ethical concerns.

Potential AI Impact!!

✔️ This incident affects the AI Principles of Safety and affects Consumers

✔️The Severity classification for AI Breach 8 is of Non-Physical Harm

💁 Why is it a Breach?

The event involves the misuse of AI to create harmful content, which directly relates to the use of AI systems leading to harm to individuals, specifically children.
