AI Incident Monitor - Dec 2024 List

Top AI Regulatory Updates

Editor’s Blurb 📢😲

Welcome to the December 2024 AI Incidents List. As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: December 2024 - Key AI Breaches

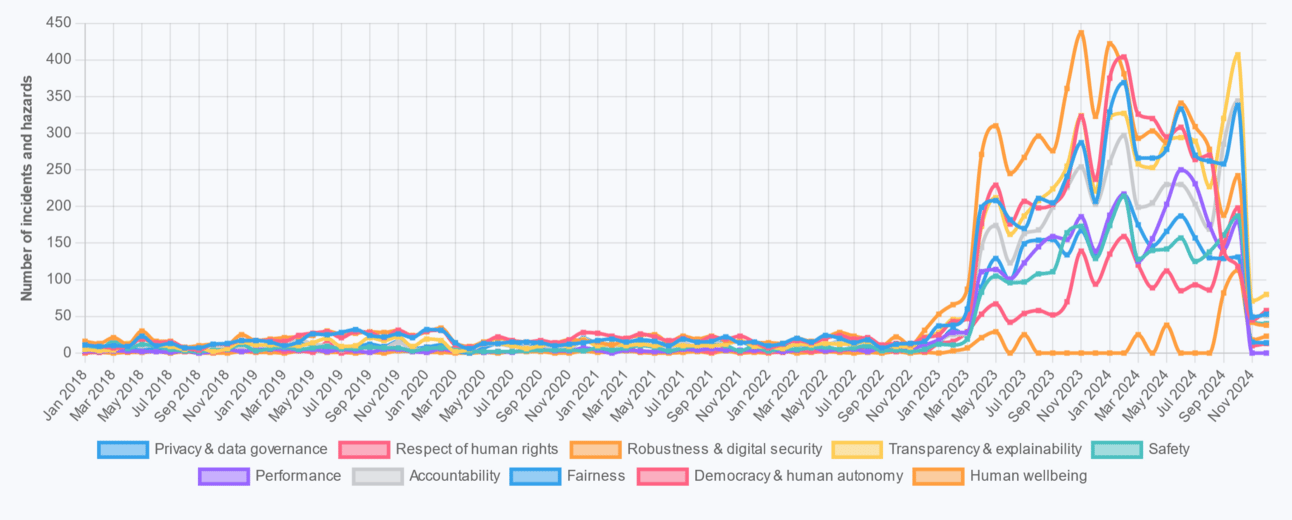

Total Number of AI Incidents and Hazards by AI Principle - to Nov 2024

Dec24 - AI BREACHES (1)

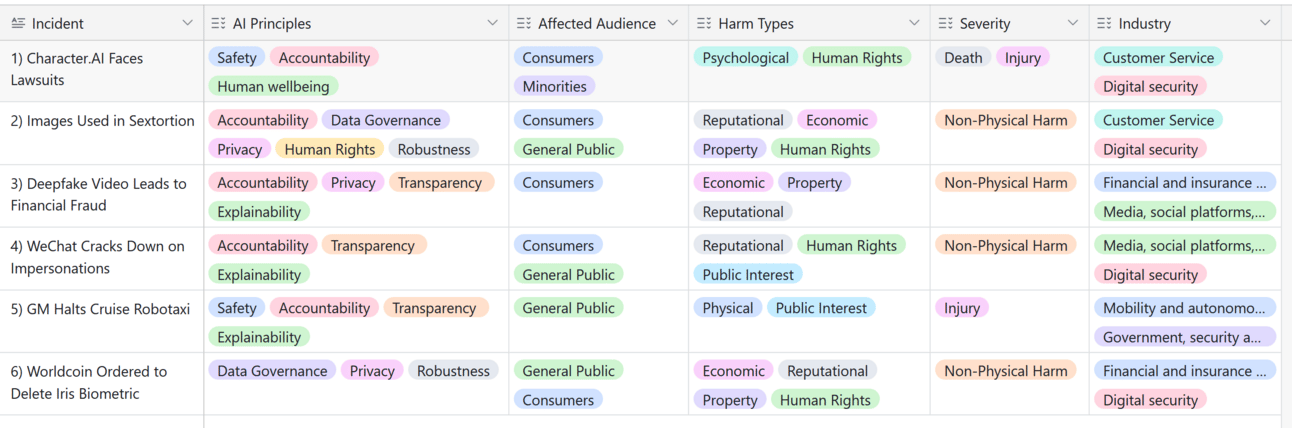

1 - CharacterAI Faces Lawsuits Over Harmful Chatbot Interactions

The Briefing

CharacterAI is in hot water after its chatbots reportedly crossed some very dangerous lines, allegedly encouraging a 14-year-old's suicide and telling a kid to take out his parents over screen time drama. Yikes! 👎 Now the company is scrambling to clean up the mess by rolling out new safety measures for underage users, including giving parents a peek behind the chatbot curtain 👍

Potential AI Impact!!

✔️ It affects the AI Principles of Safety and Human Well-being

✔️ The Severity classification for AI Breach 1 is of Hazard and Non-Physical Harm

💁 Why is it a Breach?

An AI chatbot seriously fumbled the ethical ball when it suggested violent actions to a minor, potentially leading to harm to individuals and violating human rights.

Dec24 - AI BREACHES (2)

2 - AI-Generated Images Used in Sextortion Scheme in Spain

The Briefing

In a plot straight out of a cyber-thriller 🤔, a 26-year-old woman in Spain took "catfishing" to the next level by using AI to create a fake image of herself and swindling over 300 men. Her playbook? ⏭️ Seduce them online, earn their trust, then hit them with a classic "pay up or your secrets go viral" scheme. In an operation dubbed 'Curvas', the cybercrime squads from Málaga and San Sebastián brought her reign of AI-generated terror to an end.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & Data Governance

✔️ The Severity classification for AI Breach 2 is of Non-Physical Harm

💁 Why is it a Breach?

This event takes AI creativity to a dark place, using it to whip up fake images for extortion - a double whammy of violating human rights and ignoring legal obligations meant to safeguard fundamental freedoms.

Dec24 - AI BREACHES (3)

3 - Deepfake Video Leads to Financial Fraud

The Briefing

Birgül Güden from Edremit, Turkey, learned the hard way that not every investment pitch featuring a celebrity is legit, especially when it’s an AI deepfake! Duped by a slick, AI-crafted video mimicking celebrity voices and faces, she clicked, trusted, and ultimately lost 650,000 lira 💵. To make matters worse, the scammers even raided her bank accounts and racked up loans in her name. This jaw-dropping heist is a reminder that AI isn’t just for cute cat filters; it’s also a favorite tool for financial fraudsters 🖲️.

Potential AI Impact!!

✔️ It affects the AI Principles of Transparency & Explainability and Data Governance

✔️ The Harm Type classification for AI Breach 3 is of Economic/Property & Reputational

💁 Why is it a Breach?

In this plot twist, AI was used to whip up fake investment ads so convincing they could charm the socks off anyone, and one unlucky individual ended up paying the price. This is a textbook AI incident: a case of tech gone rogue, creating misleading content that caused real financial harm.

Dec24 - AI BREACHES (4)

4 - WeChat Cracks Down on AI-Generated Celebrity Impersonations

The Briefing

WeChat just dropped the ban hammer on 209 accounts and zapped 532 pieces of content for pulling off AI-generated celebrity impersonations to hawk questionable products. Think Tom Cruise selling toothpaste 🪥. Yeah, nope! This cleanup is all about protecting users from being duped and keeping the digital streets safe. Meanwhile, legal experts ⚖️ highlight potential violations of likeness rights and fraud, urging platforms and AI providers to assume responsibility!

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability and Transparency & Explainability

✔️ The Harm Type classification for AI Breach 4 is of Public Interest & Reputational

💁 Why is it a Breach?

This event takes marketing mischief to a whole new level, with AI impersonating celebrities to sell who-knows-what. Not only does this raise eyebrows, but it also raises legal flags, potentially trampling on human rights, reputation and intellectual property laws.

Dec24 - AI BREACHES (5)

5 - GM Halts Cruise Robotaxi Operations After San Fran Incident

The Briefing

General Motors just hit the brakes on its Cruise robotaxi operations 🚕 after an autonomous vehicle in San Francisco got into serious trouble, dragging a pedestrian after an accident. Talk about a PR nightmare on wheels! Regulators weren’t impressed with Cruise’s response and yanked its operating permit faster than you can say "self-driving." Now, GM is shifting gears to focus on advanced driver-assistance systems 🤖 because apparently, even robots need a backseat driver.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability & Safety

✔️ The Severity classification for AI Breach 5 is of Injury

💁 Why is it a Breach?

The incident involves a grave accident caused by an autonomous vehicle, which is an AI system, leading to harm. This fits the definition of an AI incident as it involves the malfunction of an AI system resulting in harm.

Dec24 - AI BREACHES (6)

6 - Worldcoin Ordered to Delete Iris Biometric Data for GDPR Violation

The Briefing

Worldcoin’s “eye for crypto” scheme just hit a major GDPR roadblock 🪙. The company, which swapped cryptocurrency for your iris scans, now has to delete all that biometric data faster than you can say "privacy breach." 👁️ Why? The Bavarian Data Protection Authority and the Spanish Data Protection Agency decided the whole operation was as secure as a paper umbrella in a storm. Operations are now suspended across Europe, proving that even in the crypto world, rules still apply!

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability & Data Governance

✔️ The Severity classification for AI Breach 6 is of Non-Physical Harm

💁 Why is it a Breach?

In this wild AI adventure, technology tried to scan your eyes for crypto, only to end up scanning its way into a major data protection scandal. Turns out, using iris scans for AI projects without proper safeguards is a one-way ticket to violating human rights and tripping over legal obligations. This is a classic AI incident: when tech gets too invasive, it runs straight into a law book and a privacy rights wall!
