- The AI Bulletin

- Posts

- AI Incident Monitor - Oct 2025 List

AI Incident Monitor - Oct 2025 List

Microsoft Teams’ New Location-Tracking Feature Raises Privacy Concerns - ALSO, Critical Prompt Injection Vulnerability Found in OpenAI’s AI-Powered Atlas Browser - and Others

Editor’s Blur 📢😲

Less than 1 min read

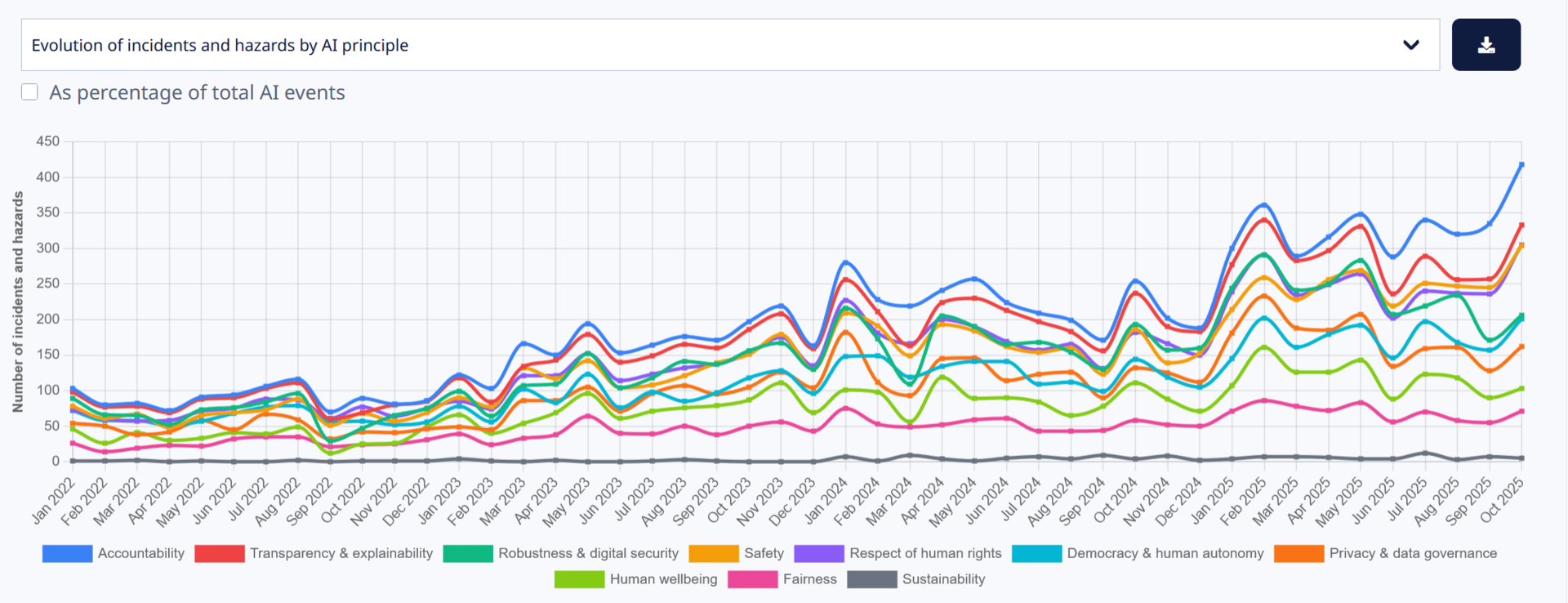

Welcome to the October 2025 AI Incident’s List - As we now, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies will take you more than a lucky guess - it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. AIM keeps tabs, at the end of each month, on global AI mishaps and hazards🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: October 25 - Key AI Breaches

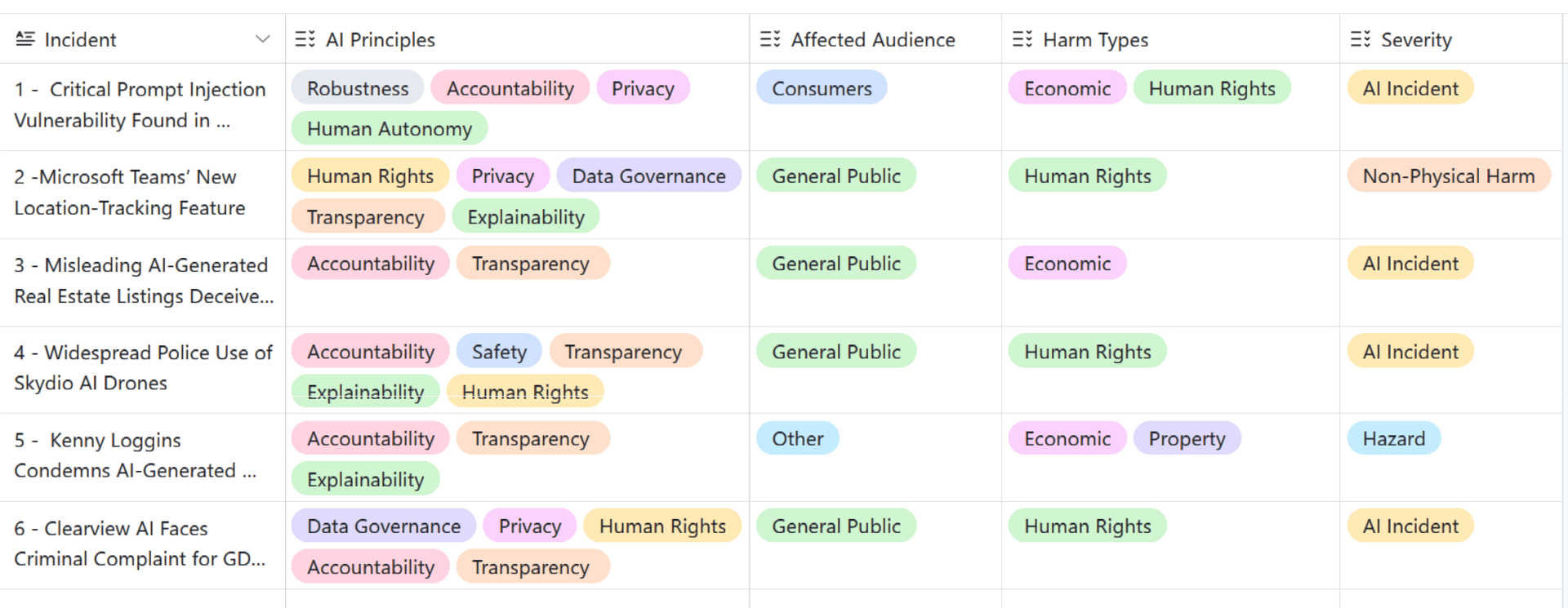

Critical Prompt Injection Vulnerability Found in OpenAI’s AI-Powered Browser

Microsoft Teams’ New Location-Tracking Feature Raises Privacy Concerns

Misleading AI-Generated Real Estate Listings Deceive Home Buyer

Widespread Police Use of Skydio AI Drones Raises Civil Liberty/Privacy Concerns

Kenny Loggins Condemns AI-Generated Trump Video,

Clearview AI Faces Criminal Complaint for GDPR Violations

Total Number of AI Incidents by Hazard - Jan to Oct 2025

AI BREACHES (1)

1- Critical Prompt Injection Vulnerability Found in OpenAI’s AI-Powered Atlas Browser

The Briefing

Security researchers at NeuralTrust uncovered a severe flaw in OpenAI’s new Atlas browser, where the AI-driven omnibox can misinterpret malicious, URL-like inputs as trusted commands. This prompt injection vulnerability enables attackers to bypass safety controls, posing risks of phishing, data theft, and unauthorized actions by the AI agent. The incident demonstrates a realized security risk arising from the misuse and exploitation of an AI system, qualifying it as an AI Incident due to direct harm potential and system compromise.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Privacy & data governance, Respect of human rights, Robustness & digital security, Safety, Democracy & human autonomy

✔️ The Severity is AI Incident

✔️ Harm Type Economic/Property, Human or fundamental rights

💁 Why is it a Breach?

The event involves OpenAI’s AI-powered Atlas browser, which contains a prompt injection vulnerability allowing attackers to trick the system into executing malicious commands. This flaw enables unauthorized actions, including potential data theft and deletion of user files. Since the vulnerability is real and exploitable, it represents realized harm to users’ data security and property. The AI system’s misinterpretation of malicious inputs as legitimate commands directly causes this risk, making it a confirmed AI Incident rather than a hypothetical hazard.

AI BREACHES (2)

2- Microsoft Teams’ New Location-Tracking Feature Raises Privacy Concerns

The Briefing

Microsoft announced an AI-powered feature in Teams that will automatically track and share employees’ real-time workplace locations using Wi-Fi data. While the feature aims to improve coordination and safety, it has sparked concerns about employee privacy and workplace surveillance. As no direct harm has yet occurred, the event is classified as an AI Hazard, representing a credible future risk of privacy violations and misuse of personal data once the feature is deployed.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Respect of human rights, Transparency & explainability, Democracy & human autonomy, Accountability

✔️ The Severity is AI Hazard

✔️ Harm Type is Human or fundamental rights

💁 Why is it a Breach?

Microsoft’s planned AI-enabled location-tracking feature in Teams will use Wi-Fi data to infer and share employees’ real-time workplace locations. While intended to enhance operational efficiency, the system could plausibly lead to privacy breaches and labor rights violations if misused. As the feature is not yet active and no harm has materialized, the event qualifies as an AI Hazard - a credible potential risk arising from the deployment of AI systems capable of impacting individuals’ rights and workplace autonomy.

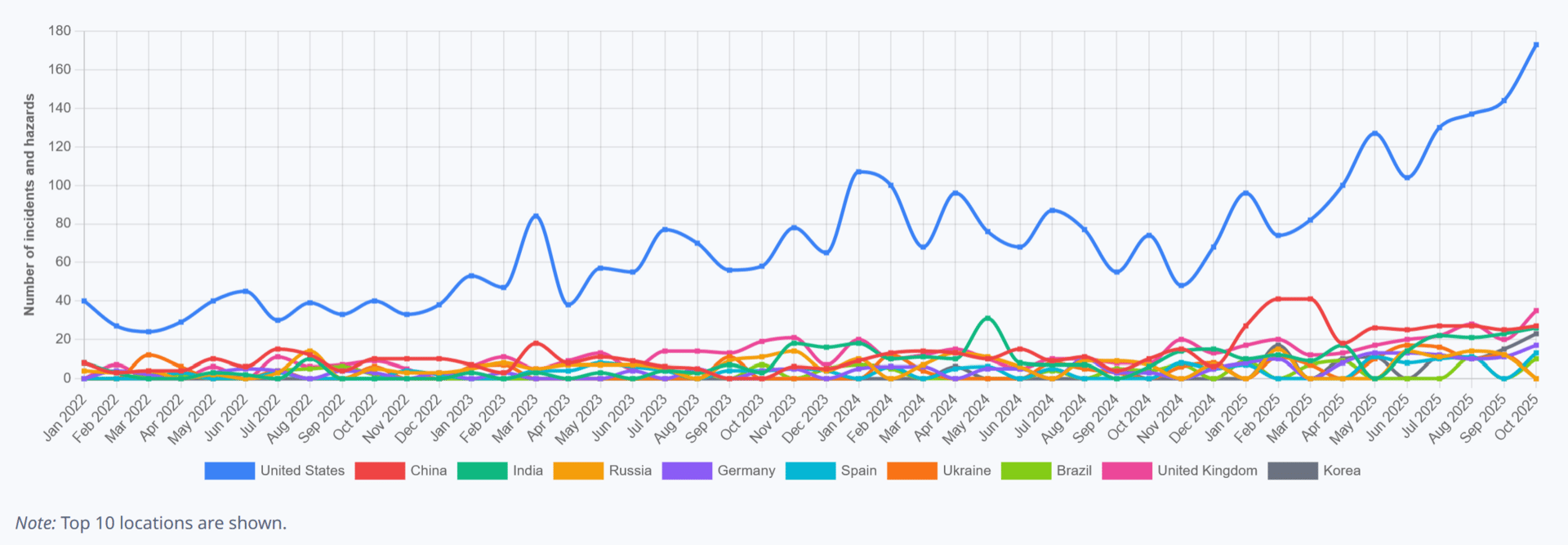

Incidents by Location - Oct 2025

AI BREACHES (3)

3 - Misleading AI-Generated Real Estate Listings Deceive Home Buyer

The Briefing

Real estate agents in the U.S. are increasingly using AI-generated images and videos to market properties, but many listings misrepresent actual property conditions and features. Buyers have reported significant discrepancies between the AI-enhanced listings and reality, resulting in consumer deception and loss of trust. The event demonstrates realized harm caused by the use of AI in misleading advertising, breaching transparency and consumer protection principles. Therefore, it qualifies as an AI Incident due to the direct involvement of AI systems in generating deceptive content that harms buyers and communities.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability and Transparency

✔️ The Industries affected Real Estate

✔️ Harm Type is Economic, Property

💁 Why is it a Breach?

Real estate agents across the U.S. are increasingly using AI-generated images and videos to market properties, but many of these listings misrepresent actual conditions and features. Buyers have reported discovering significant discrepancies between advertised visuals and real properties, resulting in financial and consumer harm. The misuse of generative AI for deceptive marketing constitutes a realized breach of consumer trust and transparency, fitting the definition of an AI Incident due to direct harm caused by the use of AI systems in misleading commercial practices

AI BREACHES (4)

4 - Widespread Police Use of Skydio AI Drones Raises Civil Liberty and Privacy Concerns

The Briefing

AI-powered Skydio drones, originally used by Israel in military operations, are now deployed by over 800 U.S. law enforcement agencies for autonomous surveillance of civilians and protests. The drones’ AI-driven data collection and tracking capabilities pose serious risks to privacy and civil liberties, particularly concerning mass surveillance and lack of oversight. As these systems are actively in use and causing realized harm to individual rights and freedoms, the event qualifies as an AI Incident, illustrating the societal impact of AI-enabled surveillance technologies on democratic and human right.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Privacy & data governance, Respect of human rights, Democracy & human autonomy

✔️ The Affected Stakeholders General Public

✔️ Harm Type Human or fundamental rights and Public interest

💁 Why is it a Breach?

Their AI-driven monitoring and data collection directly impact privacy, community rights, and civil liberties, representing realized harms rather than potential risks. The widespread and ongoing use of these drones contributes to the normalization of militarized surveillance and erosion of public trust, linking the harms directly to the AI system’s operation. Therefore, this qualifies as an AI Incident, as the AI-enabled surveillance has resulted in tangible societal and human rights impacts.

Total Number of Incidents - Rolling Average to August 2025

AI BREACHES (5)

5 - Kenny Loggins Condemns AI-Generated Trump Video

The Briefing

Singer Kenny Loggins demanded the removal of his song “Danger Zone” from an AI-generated video posted by Donald Trump, which depicted him dumping sludge on protesters. The AI system’s creation of the video used copyrighted music without authorization and combined it with controversial imagery, resulting in reputational damage and intellectual property infringement. The harms are realized and directly linked to the AI-generated content, qualifying this event as an AI Incident.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Transparency & explainability

✔️ The Affected Stakeholders Other

✔️ Harm Type Economic/Property, Reputational

💁 Why is it a Breach?

An AI system was used to generate a video depicting Donald Trump dumping sludge on protesters while incorporating Kenny Loggins’ song “Danger Zone” without authorization. This unauthorized use of copyrighted material constitutes a violation of intellectual property rights, and the video’s content led to reputational and social harm. The harm is realized and directly attributable to the AI system’s outputs, meeting the criteria for an AI Incident despite being limited to intellectual property and reputational impacts rather than physical or critical infrastructure harm.

AI BREACHES (6)

6 - Clearview AI Faces Criminal Complaint for GDPR Violations

The Briefing

Austrian privacy organization noyb filed a criminal complaint against Clearview AI for the illegal collection and processing of billions of images of EU residents to power its facial recognition system, in violation of GDPR. Despite prior fines and bans from European regulators, Clearview AI has reportedly continued its practices, prompting calls for criminal sanctions against executives. The AI system’s operation has directly caused realized harm to privacy rights and legal obligations, qualifying this event as an AI Incident.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Respect of human rights, Accountability, Transparency & explainability

✔️ The Severity is AI Incident

✔️ Harm Type Human or fundamental rights

💁 Why is it a Breach?

Clearview AI operates a facial recognition AI system that collects and processes biometric data of EU residents without consent, breaching GDPR and privacy rights. This constitutes a violation of fundamental rights and legal obligations, representing realized harm caused directly by the AI system’s use. The criminal complaint filed by noyb, alongside prior regulatory fines, confirms that the AI-enabled practices have already caused harm. Therefore, this event qualifies as an AI Incident, not merely a potential risk or informational update.

Reply