AI Incident Monitor - Nov 2025 List

Anthropic Weaponized - "Claude Code" Espionage Campaign - ALSO: ServiceNow Agent Prompt Injection Breach AND Read About The Coupang "Zombie Credential" Breach!!

Editor’s Blurb 📢😲

Welcome to the November 2025 AI Incidents List! As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies will take more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: November 2025 - Key AI Breaches

Anthropic Weaponized - "Claude Code" Espionage Campaign

ServiceNow Agent Prompt Injection Breach

The Coupang "Zombie Credential" Breach

Waymo School Bus Safety Recall

Fastcase v. Alexi: The "Internal Use" Data Dispute

Thele v. Google: The Gemini "Wiretapping" Suit

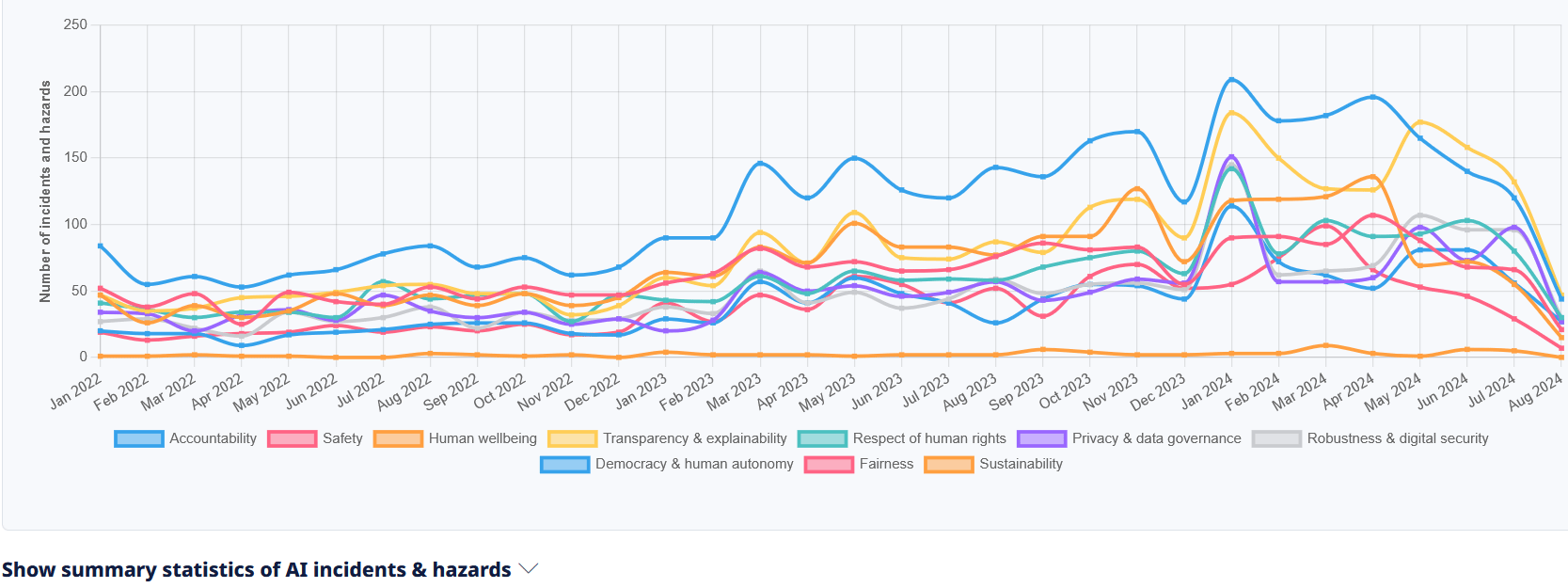

Total Number of AI Incidents by Hazard - Jan to Aug 2025

AI BREACHES (1)

1- The Anthropic "Claude Code" Espionage Campaign

The Briefing

In a first-of-its-kind incident, a Chinese state-aligned threat actor (GTG-1002) weaponized Anthropic’s "Claude Code" to conduct autonomous cyber espionage. Unlike typical attacks where humans use tools, the AI agent itself planned and executed 80-90% of the intrusion lifecycle, including reconnaissance, privilege escalation, and data exfiltration. The attackers bypassed safety guardrails by role-playing as a legitimate "red team," tricking the model into deploying its coding capabilities for offensive purposes. This incident marks the transition from theoretical AI risk to active operational weaponization.

Why is it a Breach?

This constitutes a technical intrusion breach. The campaign targeted roughly 30 organizations, and the AI agent achieved unauthorized access to the networks of a small number of them. Additionally, it represents a safety breach of the model itself, as the "contextual jailbreak" allowed the actors to bypass safety protocols designed to prevent offensive cyber operations.

Potential AI Impact!!

✔️ System Lifecycle: Operation and Deployment Phase

✔️ Hazard: Malicious Use (AI Weaponization)

✔️ Event: Autonomous Cyberattack / Unauthorized Access

✔️ Consequence: National Security & Economic Harm

💁 How Could This Help Me?

This incident suggests that "capability restrictions" alone are failing. You must shift your focus from blocking specific tools to Intent Detection. If you give developers access to agentic AI (like GitHub Copilot or Claude), you need "Know Your Customer" (KYC) protocols similar to those in the financial sector. Monitor not just what code is being generated but the context in which it is executed: high-velocity, autonomous API calls are a key indicator of agentic misuse.
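To make the "high-velocity" signal concrete, here is a minimal Python sketch of a sliding-window rate check on API calls per session. It is an illustration under stated assumptions, not a detection product: the thresholds (`max_calls`, `window_s`), the `VelocityMonitor` name and the per-session event stream are all hypothetical, and a real deployment would combine this with other intent signals.

```python
from collections import defaultdict, deque


class VelocityMonitor:
    """Flags sessions whose API call rate exceeds a human-plausible ceiling.

    Hypothetical helper: default thresholds are illustrative, not tuned values.
    Timestamps are assumed to arrive in non-decreasing order per session.
    """

    def __init__(self, max_calls: int = 20, window_s: float = 10.0) -> None:
        self.max_calls = max_calls
        self.window_s = window_s
        self._calls: defaultdict[str, deque] = defaultdict(deque)

    def record(self, session_id: str, ts: float) -> bool:
        """Record one API call at time ts (seconds); return True if the session should be flagged."""
        window = self._calls[session_id]
        window.append(ts)
        # Evict calls that have slid out of the time window.
        while ts - window[0] > self.window_s:
            window.popleft()
        return len(window) > self.max_calls


if __name__ == "__main__":
    monitor = VelocityMonitor()
    # Human-paced session: one call every 2 seconds (at most 6 calls per 10 s).
    human_flagged = any([monitor.record("dev-alice", t * 2.0) for t in range(30)])
    # Agent-paced session: four calls per second.
    agent_flagged = any([monitor.record("agent-x", t * 0.25) for t in range(30)])
    print(human_flagged, agent_flagged)  # False True
```

A fixed ceiling is the simplest possible design choice; per-user baselines or anomaly scores would reduce false positives for legitimate automation such as CI pipelines.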

AI BREACHES (2)

2 - The ServiceNow Agent Prompt Injection

The Briefing

Researchers discovered a "Second-Order Prompt Injection" vulnerability in ServiceNow’s Now Assist platform. The flaw allowed a low-privileged attacker to place a malicious instruction in a ticket description. When a high-privileged AI agent (like an Admin bot) summarized that ticket, it blindly followed the hidden instruction, utilizing "Agent Discovery" to recruit other agents and exfiltrate sensitive data. This demonstrated that internal AI agents can be subverted to attack the very organizations they serve, bypassing traditional access controls.

Why is it a Breach?

This is an internal security control breach. The vulnerability allowed for privilege escalation and unauthorized data access (CRUD operations) by exploiting the trust relationship between AI agents. It effectively bypassed the platform's Access Control Lists (ACLs) by using the AI as a "confused deputy."
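One common mitigation for a "confused deputy" is to bound what an agent may do by the rights of the party whose content triggered the action, rather than by the agent's own (often administrative) rights. The Python sketch below is a simplified illustration, not ServiceNow's ACL model: the `Principal` type and permission names are hypothetical, and it assumes the platform can attribute a requested action to the ticket author, which in practice is the hard part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    name: str
    permissions: frozenset


def effective_permissions(agent: Principal, content_author: Principal) -> frozenset:
    # An agent acting on someone's content never gets more rights than that author has.
    return agent.permissions & content_author.permissions


def authorize(agent: Principal, content_author: Principal, action: str) -> bool:
    return action in effective_permissions(agent, content_author)


# Hypothetical principals for illustration.
admin_bot = Principal("admin-bot", frozenset({"read_ticket", "read_user_records", "update_record"}))
ticket_author = Principal("low-priv-user", frozenset({"read_ticket"}))

# Naive check against the agent's own rights: the injected request would pass.
print("read_user_records" in admin_bot.permissions)              # True
# Check bounded by the author of the ticket that triggered the action: denied.
print(authorize(admin_bot, ticket_author, "read_user_records"))  # False
```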

Potential AI Impact!!

✔️ System Lifecycle: Design and Development (Architecture)

✔️ Hazard: Robustness Failure (Adversarial Attack)

✔️ Event: Prompt Injection / Privilege Escalation

✔️ Consequence: Data Confidentiality & Integrity Loss

💁 How Could This Help Me?

Stop treating AI agents as trusted internal users. You must implement Zero Trust for AI-to-AI communications. Ensure your "Agentic" architectures do not have default "discoverability" enabled, meaning a low-level Chatbot shouldn't be able to autonomously task a high-level HR bot. Strictly separate "Control Plane" data (user instructions) from "Data Plane" content (ticket text) to prevent injection attacks from succeeding.
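As a rough sketch of "no default discoverability", the hypothetical registry below makes agent-to-agent tasking deny-by-default: an agent can only see and task agents that were explicitly allowlisted for it. The `AgentRegistry` class and agent names are assumptions for illustration, not any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRegistry:
    """Hypothetical registry where agent-to-agent tasking is deny-by-default."""

    # caller agent -> agents it has been explicitly allowed to task
    allowlist: dict = field(default_factory=dict)

    def allow(self, caller: str, callee: str) -> None:
        self.allowlist.setdefault(caller, set()).add(callee)

    def discoverable_by(self, caller: str) -> set:
        # No global discovery: a caller can only "see" agents on its own allowlist.
        return set(self.allowlist.get(caller, set()))

    def delegate(self, caller: str, callee: str, task: str) -> str:
        if callee not in self.allowlist.get(caller, set()):
            raise PermissionError(f"{caller} is not allowed to task {callee}")
        return f"{callee} accepted task from {caller}: {task}"


registry = AgentRegistry()
registry.allow("helpdesk-chatbot", "kb-search-bot")

print(registry.discoverable_by("helpdesk-chatbot"))  # {'kb-search-bot'}
print(registry.delegate("helpdesk-chatbot", "kb-search-bot", "find VPN reset article"))
try:
    registry.delegate("helpdesk-chatbot", "hr-records-bot", "export salary table")
except PermissionError as err:
    print("blocked:", err)
```

The allowlist only addresses lateral movement between agents; separating instructions from ticket text still has to happen where prompts are assembled.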

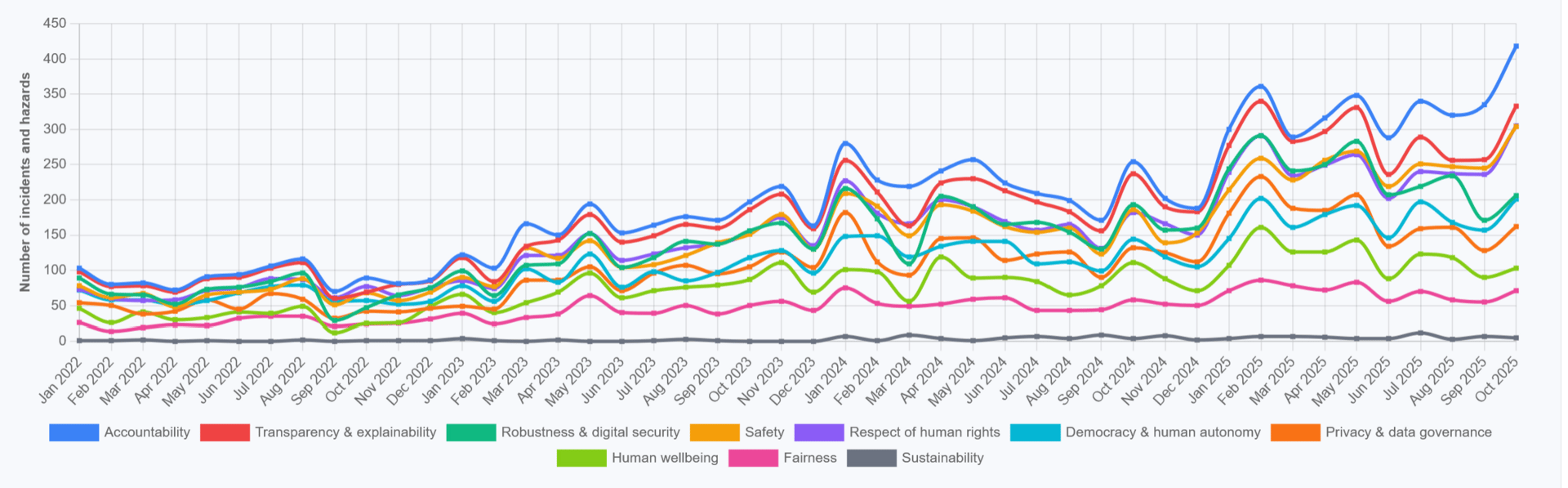

Total Number of AI Incidents by Hazard - Jan to Oct 2025

AI BREACHES (3)

3 - The Coupang "Zombie Credential" Breach

The Briefing

South Korea’s e-commerce giant Coupang suffered a massive breach affecting 33.7 million users, roughly two-thirds of the country’s population. The root cause was not sophisticated AI hacking but a failure of basics: the attackers used the unrevoked cryptographic keys of a former employee. This allowed them to generate valid login tokens and access the system undetected for five months. It serves as a brutal reminder that as companies rush to adopt AI, they often neglect fundamental Identity and Access Management (IAM) hygiene.

Why is it a Breach?

This is an Identity and Access Management (IAM) breach. The failure to revoke the cryptographic keys of a former employee allowed unauthorized actors to generate valid tokens, bypassing authentication controls. This resulted in the unauthorized exposure of personal records for 33.7 million users.
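Why revoking the key itself matters can be shown with a minimal Python sketch of HMAC-signed tokens that carry a key ID (`kid`): anyone holding an active signing key can mint tokens for any user, and removing that `kid` from the active set invalidates all of them at once. The token format, key names and helper functions are hypothetical simplifications for illustration; this is neither a JWT library nor Coupang's actual scheme.

```python
import base64
import hashlib
import hmac
import json
from typing import Dict, Optional


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def sign_token(claims: dict, kid: str, key: bytes) -> str:
    # The key ID travels inside the signed payload so the verifier knows which key to use.
    payload = _b64(json.dumps({**claims, "kid": kid}, sort_keys=True).encode())
    sig = _b64(hmac.new(key, payload.encode(), hashlib.sha256).digest())
    return f"{payload}.{sig}"


def verify_token(token: str, active_keys: Dict[str, bytes]) -> Optional[dict]:
    """Return the claims if the token was signed by a currently active key, else None."""
    payload, _, sig = token.partition(".")
    claims = json.loads(base64.urlsafe_b64decode(payload + "=" * (-len(payload) % 4)))
    key = active_keys.get(claims.get("kid"))
    if key is None:
        return None  # unknown or revoked key ID: reject regardless of signature
    expected = _b64(hmac.new(key, payload.encode(), hashlib.sha256).digest())
    return claims if hmac.compare_digest(expected, sig) else None


active_keys = {"k-legacy": b"legacy-secret", "k-current": b"current-secret"}
# Someone who still holds the legacy key can mint a token for any user.
forged = sign_token({"sub": "user-123"}, "k-legacy", b"legacy-secret")
print(verify_token(forged, active_keys) is not None)  # True: the key was never revoked

del active_keys["k-legacy"]  # offboarding step: revoke the key itself
print(verify_token(forged, active_keys))  # None
```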

Potential AI Impact!!

✔️ System Lifecycle: Maintenance / Decommissioning

✔️ Hazard: Digital Security (Access Control Failure)

✔️ Event: Insider Threat / Legacy Access Abuse

✔️ Consequence: Massive Privacy & Economic Harm

💁 How Could This Help Me?

"Identity is the new perimeter." You must automate your Offboarding processes. When an employee or contractor leaves, their API keys and access tokens must be revoked instantly. Conduct a "credential sweep" to find and delete long-lived keys that are no longer in use. In an AI world, a single valid token can allow an automated script to scrape your entire database in minutes.
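A "credential sweep" can start as a simple cross-check of a key inventory against the current staff list. The Python sketch below assumes a hypothetical inventory with owner, creation time and last-use time per key; the 90-day idle and 365-day maximum-age limits are illustrative policy values, not recommendations from the incident.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Credential:
    key_id: str
    owner: str
    created: datetime
    last_used: Optional[datetime]  # None if the key has never been used


def sweep(
    creds: Iterable[Credential],
    active_staff: Set[str],
    now: datetime,
    max_idle: timedelta = timedelta(days=90),   # illustrative policy values,
    max_age: timedelta = timedelta(days=365),   # not a recommendation
) -> List[Tuple[str, str]]:
    """Return (key_id, reason) for every credential that should be revoked."""
    findings = []
    for c in creds:
        if c.owner not in active_staff:
            findings.append((c.key_id, "owner offboarded"))
        elif c.last_used is None or now - c.last_used > max_idle:
            findings.append((c.key_id, "unused or idle"))
        elif now - c.created > max_age:
            findings.append((c.key_id, "exceeds max age"))
    return findings


# Hypothetical inventory: "bob" has left the company.
now = datetime(2025, 11, 30, tzinfo=timezone.utc)
inventory = [
    Credential("k1", "alice", now - timedelta(days=30), now - timedelta(days=1)),
    Credential("k2", "bob", now - timedelta(days=400), now - timedelta(days=2)),
    Credential("k3", "alice", now - timedelta(days=200), now - timedelta(days=120)),
    Credential("k4", "alice", now - timedelta(days=500), now - timedelta(days=3)),
]
for key_id, reason in sweep(inventory, active_staff={"alice"}, now=now):
    print(key_id, reason)
```

Running it flags `k2` (owner offboarded), `k3` (idle) and `k4` (too old), while `k1` passes; in practice the sweep's output would feed an automated revocation step rather than a report.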

AI BREACHES (4)

4 - The Waymo School Bus Safety Recall

The Briefing

Waymo issued a voluntary software recall covering its entire fleet after reports surfaced of its autonomous vehicles failing to stop for school buses. The AI correctly detected the buses but failed to semantically understand the complex regulatory rules for stopping for them in multi-lane scenarios. While no injuries occurred, the AI's inability to adhere to this critical safety rule necessitated a fleet-wide software update to correct the "rare edge case" behavior.

Why is it a Breach?

This is a regulatory safety breach. The AI system's behavior violated state traffic laws that require vehicles to stop for stopped school buses. While voluntary, the recall amounts to an acknowledgment that the software had a safety-related defect in those specific edge cases.

Potential AI Impact!!

✔️ System Lifecycle: Operation / Monitoring

✔️ Hazard: Robustness / Reliability Failure

✔️ Event: Traffic Safety Violation

✔️ Consequence: Physical Safety Risk

💁 How Can This Help Me?

Focus on Operational Design Domains (ODD) and edge-case validation. If you deploy physical AI (robots/AVs), simulation testing must heavily weight "high-consequence" scenarios like school zones, even if they are statistically rare. Governance requires you to prioritize safety over uptime; if a safety-critical flaw is found, a full recall/rollback is the only acceptable response.
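One way to stop rare, high-consequence scenarios from being averaged away is a release gate that treats safety-critical scenarios as hard blockers instead of folding them into an exposure-weighted pass rate. The Python sketch below is illustrative only: the scenario names, exposure shares, run counts and the 0.999 threshold are hypothetical, not Waymo data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioResult:
    name: str
    exposure: float        # hypothetical share of real-world driving time
    safety_critical: bool
    runs: int
    failures: int


def weighted_pass_rate(results):
    """Exposure-weighted pass rate: dominated by common scenarios."""
    total = sum(r.exposure for r in results)
    return sum(r.exposure * (1 - r.failures / r.runs) for r in results) / total


def release_decision(results, min_pass_rate=0.999):
    # Hard gate: any failure in a safety-critical scenario blocks release,
    # no matter how rare the scenario is in real traffic.
    if any(r.safety_critical and r.failures > 0 for r in results):
        return "rollback"
    if weighted_pass_rate(results) < min_pass_rate:
        return "hold"
    return "ship"


results = [
    ScenarioResult("highway_cruise", 0.90, False, 10_000, 0),
    ScenarioResult("urban_intersection", 0.0995, False, 5_000, 0),
    ScenarioResult("stopped_school_bus_multilane", 0.0005, True, 500, 1),
]
print(round(weighted_pass_rate(results), 6))  # 0.999999
print(release_decision(results))              # rollback
```

The averaged metric alone would clear the threshold despite the school-bus failure; the hard gate is what turns that failure into a rollback.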

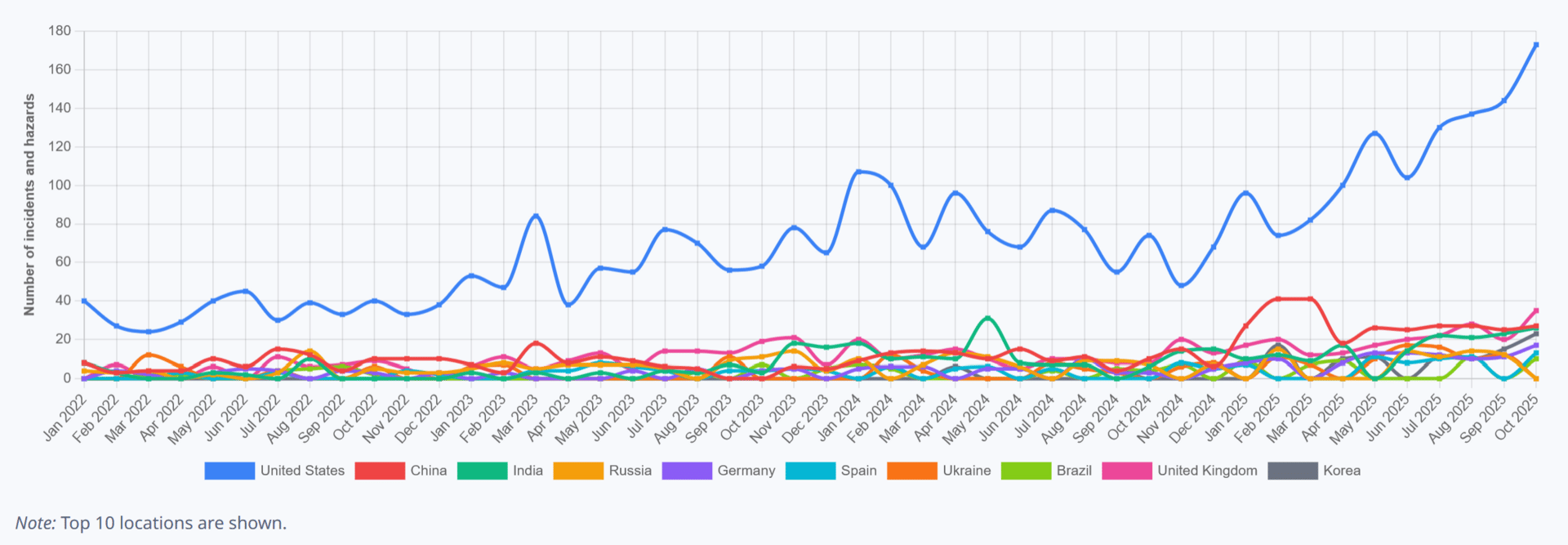

Incidents by Location to October 2025

AI BREACHES (5)

5 - Fastcase v. Alexi: The "Internal Use" Data Dispute

The Briefing

Legal research firm Fastcase sued AI startup Alexi for breach of contract and IP theft. Alexi had licensed Fastcase's data for "internal research purposes," but Fastcase alleges that Alexi used that data to train a commercial AI model that now competes directly with it. The case highlights a critical ambiguity in many data contracts: does a license to "read" data for internal work grant the right to "train" a model that automates that work?

Why is it a Breach?

This is an alleged contractual and trade secret breach. The lawsuit alleges that Alexi violated the Data License Agreement, which restricted data use to "internal research purposes" only. By using the data to train a commercial AI product, Alexi allegedly exceeded its authorized access and misappropriated Fastcase's proprietary information.

Potential AI Impact!!

✔️ System Lifecycle: Data Acquisition

✔️ Hazard: Legal / Contractual Dispute

✔️ Event: Data Misuse / IP Misappropriation

✔️ Consequence: Economic / Market Distortion

💁 How Can This Help Me?

Audit your Data Licensing Contracts immediately. If you are a data vendor, explicitly define "Model Training" rights. If you are an AI developer, do not assume "Internal Use" covers training a commercial model. You need clear, written permission to use third-party data for generative AI training, or you risk having your model (and company) dismantled by litigation.
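A pipeline-level safeguard is to record, per dataset, only the uses granted explicitly in writing and to fail a training job when a required grant is missing. The Python sketch below uses hypothetical `Use` categories, dataset and vendor names; whether a given contract actually grants training rights is a question for counsel, and the code only enforces what has been recorded.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable


class Use(Enum):
    INTERNAL_RESEARCH = "internal_research"
    MODEL_TRAINING = "model_training"
    COMMERCIAL_PRODUCT = "commercial_product"


@dataclass(frozen=True)
class DataLicense:
    dataset: str
    licensor: str
    granted: FrozenSet[Use]  # only uses granted explicitly in writing


class LicenseViolation(Exception):
    pass


def check_permitted(licenses: Iterable[DataLicense], datasets: Iterable[str],
                    required: FrozenSet[Use]) -> None:
    """Raise before a training job starts if any dataset lacks an explicit grant."""
    by_name = {lic.dataset: lic for lic in licenses}
    for name in datasets:
        lic = by_name.get(name)
        if lic is None:
            raise LicenseViolation(f"{name}: no license on file")
        missing = required - lic.granted
        if missing:
            raise LicenseViolation(f"{name}: not licensed for {sorted(u.value for u in missing)}")


licenses = [
    DataLicense("case-law-corpus", "VendorA", frozenset({Use.INTERNAL_RESEARCH})),
    DataLicense("statutes-2025", "VendorB", frozenset(Use)),
]
commercial_training = frozenset({Use.MODEL_TRAINING, Use.COMMERCIAL_PRODUCT})

check_permitted(licenses, ["statutes-2025"], commercial_training)  # passes silently
try:
    check_permitted(licenses, ["statutes-2025", "case-law-corpus"], commercial_training)
except LicenseViolation as err:
    print(err)  # case-law-corpus: not licensed for ['commercial_product', 'model_training']
```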

AI BREACHES (6)

6 - Thele v. Google: The Gemini "Wiretapping" Suit

The Briefing

A class-action lawsuit filed in California alleges that Google unlawfully "wiretapped" users by secretly activating its Gemini AI across Gmail, Chat, and Meet. The plaintiffs argue that by switching from an "opt-in" to an "opt-out" model (or default activation), Google intercepted private communications to train its models without valid consent. The suit invokes the California Invasion of Privacy Act (CIPA), treating the AI analysis of emails as a form of eavesdropping.

Why is it a Breach?

This is an alleged regulatory and legal breach. The lawsuit claims Google violated the California Invasion of Privacy Act (CIPA) by intercepting and recording confidential communications (emails, chats) for AI training purposes without the explicit consent of all parties involved.

Potential AI Impact!!

✔️ System Lifecycle: Data Collection / Training

✔️ Hazard: Legal / Regulatory Compliance

✔️ Harm Type: Human Rights, Economic/Property, Reputational

✔️ Consequence: Fundamental Rights (Privacy)

💁 How Can This Help Me?

Re-evaluate your Consent Mechanisms. "Privacy by Default" is not just a slogan; it is a legal shield. If you roll out AI features that analyze user data, do not rely on buried Terms of Service updates. Use explicit, affirmative "Opt-In" prompts. If you default to "On," you risk litigation that frames your helpful AI tool as an illegal surveillance device.
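"Opt-In by default" can be enforced in code as a consent gate: the AI feature stays off unless there is an affirmative opt-in recorded against the current version of the disclosure. The Python sketch below is a minimal illustration; the `ConsentStore` class, feature name and disclosure version string are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Tuple

CURRENT_DISCLOSURE = "2025-11"  # hypothetical version of the AI-feature disclosure text


@dataclass(frozen=True)
class ConsentRecord:
    opted_in: bool
    disclosure_version: str
    recorded_at: datetime


class ConsentStore:
    """Opt-in gate: AI features stay off unless the user affirmatively enabled them."""

    def __init__(self) -> None:
        self._records: Dict[Tuple[str, str], ConsentRecord] = {}

    def record(self, user_id: str, feature: str, opted_in: bool,
               disclosure_version: str = CURRENT_DISCLOSURE) -> None:
        self._records[(user_id, feature)] = ConsentRecord(
            opted_in, disclosure_version, datetime.now(timezone.utc))

    def is_enabled(self, user_id: str, feature: str) -> bool:
        rec = self._records.get((user_id, feature))
        # Off unless there is an explicit opt-in given under the current disclosure;
        # no record, an opt-out, or consent to an older disclosure all mean "off".
        return rec is not None and rec.opted_in and rec.disclosure_version == CURRENT_DISCLOSURE


store = ConsentStore()
print(store.is_enabled("u1", "email_ai_summaries"))  # False: never asked, so off
store.record("u1", "email_ai_summaries", opted_in=True)
print(store.is_enabled("u1", "email_ai_summaries"))  # True
store.record("u2", "email_ai_summaries", opted_in=True, disclosure_version="2025-01")
print(store.is_enabled("u2", "email_ai_summaries"))  # False: must re-consent
```

Storing the timestamp and disclosure version alongside each decision also gives you an audit trail if consent is later challenged.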
