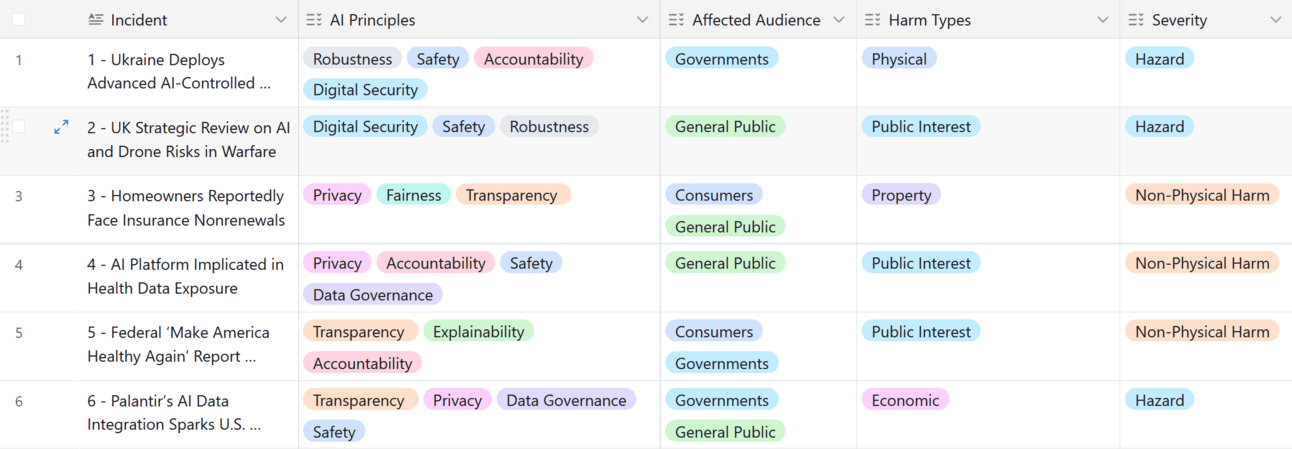

AI Incident Monitor - May 2025 List

Top AI Regulatory Updates - Read about Ukraine deploying an advanced AI-controlled mothership, homeowners reportedly facing insurance nonrenewals, plus other breaches!

Editor’s Blurb 📢😲

Less than 1 min read

Welcome to the May 2025 AI Incident List. As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: May 2025 - Key AI Breaches

Ukraine Deploys Advanced AI-Controlled Mothership Drone in War Effort

UK Strategic Review Highlights Emerging AI and Drone Risks in Warfare

Homeowners Reportedly Face Insurance Nonrenewals

AI Platform Implicated in Health Data Exposure Affecting 483,000 Patients

Federal ‘Make America Healthy Again’ Report Released Amid Citation Issues

Palantir’s AI Data Integration Sparks U.S. Surveillance Concerns

Total Number of AI Incidents by Country

AI BREACHES (1)

1 - Ukraine Deploys Advanced AI-Controlled Mothership Drone in War Effort

The Briefing

Ukraine has introduced its AI-powered "mother drone," marking a significant advancement in autonomous warfare technology. Developed by the defense tech cluster Brave1, this drone system can deploy two AI-guided first-person view (FPV) strike drones up to 300 kilometers (186 miles) behind enemy lines. Utilizing visual-inertial navigation with cameras and LiDAR, these drones operate without GPS, autonomously identifying and engaging high-value targets such as aircraft, air defense systems, and critical infrastructure.

✔️ It affects the AI Principles of Safety, Accountability, and Robustness & Digital Security, and it affects Governments

✔️ The Severity classification is Physical and Hazard

💁 Why is it a Breach?

Deploying an AI-enabled drone system capable of autonomously identifying and attacking targets, including critical infrastructure, is a dangerous activity. This use of AI for lethal targeting without direct human oversight raises significant concerns under established AI risk frameworks. Specifically, it presents a credible risk of causing harm to people, property, or the environment, meeting the criteria of an AI Hazard. Given its potential to operate independently and inflict damage, the system falls within the definition of an AI Incident as outlined in the OECD AI Incident Taxonomy, indicating a serious breach of responsible AI deployment standards.

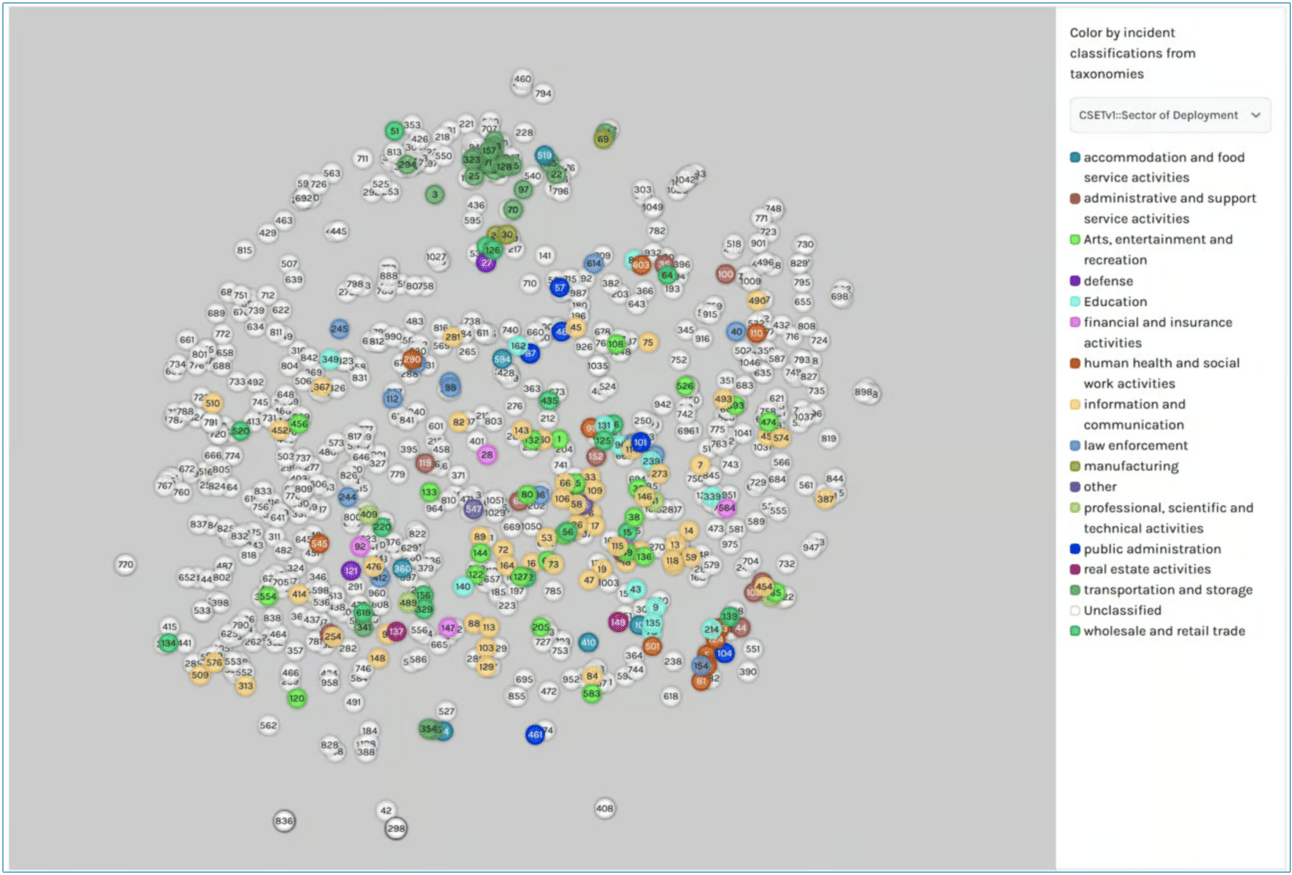

Summary of AI Incidents & Impact - Classification by Sector of Deployment (May 2025)

AI BREACHES (2)

2 - UK Strategic Review Highlights Emerging AI and Drone Risks in Warfare

The Briefing

The UK government is dropping a 130-page strategic review, and it may already need to be rewritten! The theme: AI, drones, and future warfare aren’t science fiction anymore. Borrowing real-world plot twists from the Ukraine conflict, which is now a live case study, the report warns that tech-fueled battlefields are no longer hypothetical. It spotlights the usual geopolitical headliners (Russia, China, Iran, and North Korea) as likely players in this high-tech reboot of global security. Bottom line: the rules of war are being rewritten by code, not just generals, and the UK wants everyone to pay attention, preferably before the drones do.

✔️ It affects the AI Principles of Safety, Accountability, and Robustness & Digital Security, and it affects Governments

✔️ The Severity classification is Physical and Hazard

💁 Why is it a Breach?

The UK’s latest defense review was written before the latest Ukraine attack, and it sounds the alarm on AI, drones, and high-tech warfare, pointing fingers (politely, of course) at Russia and China as likely future disruptors. At the time of writing, though, it was all still in the “what could go wrong” stage. No targeted AI had launched missiles or hijacked drones, yet. What we’re looking at is a classic AI hazard: credible potential for future harm. Think of it as a fire drill, not the fire.

AI BREACHES (3)

3 - Homeowners Reportedly Face Insurance Nonrenewals

The Briefing

In Texas, homeowners, like Tracy Gartenmann of Austin, have faced insurance nonrenewals after insurers allegedly relied on AI-analyzed aerial imagery to evaluate property risks. In Gartenmann’s case, Travelers pointed to overhanging trees identified from aerial photos as the reason for nonrenewal. Other homeowners have contested similar decisions, particularly involving roof assessments. Major insurers, including State Farm and Nationwide, are reportedly using third-party vendors such as CAPE Analytics and Nearmap to power these AI-driven evaluations - sparking concerns over accuracy, transparency, and accountability in automated insurance decisions.

✔️ It affects the AI Principles of Transparency, Fairness, and Privacy.

✔️ The Severity classification is Non-Physical

✔️ Affected Audience are Consumers

💁 Why is it a Breach?

AI systems allegedly implicated: the CAPE roof risk scoring system, Nearmap aerial analysis tools, algorithmic roof condition classification, and AI-assisted aerial imagery risk assessment. The investigation was published on May 13, 2025, and references multiple incidents from 2023 onward. The specific case of Tracy Gartenmann occurred in January 2025, while other cases involving State Farm and Nationwide span from 2023 to early 2025, based on homeowner complaints and regulatory filings, according to the reporting. See also Incident 1082 for a variant of this incident that occurred in March 2024 in California.

AI BREACHES (4)

4 - AI Platform Implicated in Health Data Exposure Affecting 483,000 Patients

The Briefing

An AI-powered platform run by Serviceaide has landed in hot water after exposing sensitive health data from Catholic Health—impacting a staggering 483,000 patients. The culprit? A misconfigured Elasticsearch database buried inside the company’s agentic AI infrastructure. The leak included everything from medical records and insurance info to login credentials—basically, a hacker’s wishlist. While there’s no evidence of misuse (yet), the sheer sensitivity of the data has triggered regulatory alarms and launched legal investigations. It’s a stark reminder that even smart systems can make dangerous mistakes when not properly locked down.

✔️ It affects the AI Principles of Data Governance, Accountability, Safety, and Privacy.

✔️ The Severity classification is Non-Physical

✔️ Affected Audience are Consumers and General Public

💁 Why is it a Breach?

According to Serviceaide’s official notice and third-party reports, the company discovered on November 15, 2024, that Catholic Health’s Elasticsearch database had been unintentionally exposed. The data was accessible from September 19 to November 5, 2024, a window of nearly seven weeks. After conducting a lengthy investigation and reviewing the scope of the breach, Serviceaide reported the incident to the U.S. Department of Health and Human Services on May 9, 2025, which is now considered the official incident date. Public announcements and notifications to affected patients began shortly thereafter.

AI BREACHES (5)

5 - Federal ‘Make America Healthy Again’ Report Released Amid Citation Issues

The Briefing

The federal "Make America Healthy Again" (MAHA) report, released under HHS Secretary Robert F. Kennedy Jr., featured hundreds of citations, some of which turned out to be nonexistent or inaccurate. Analysts spotted red flags typical of AI-generated content, including repeated references, fake studies, and suspicious URLs containing “oaicite,” hinting that tools like ChatGPT may have been used in compiling the report.

✔️ It affects the AI Principles of Transparency, Accountability, and Explainability.

✔️ The Severity classification is Non-Physical

✔️ Affected Audience are Government, General Public

💁 Why is it a Breach?

The presence of nonexistent or erroneous citations and AI-generated content in the MAHA report undermines the integrity and reliability of an official federal document. This raises serious concerns about transparency, accountability, and the responsible use of AI in government reporting. Such inaccuracies could mislead policymakers, the public, and stakeholders, potentially resulting in flawed health decisions or policies. Therefore, this situation represents an AI-related breach of trust and governance standards, highlighting the risks when AI tools are used without rigorous human oversight and verification.
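For readers who want to run this kind of sanity check on their own documents, here is a minimal sketch of two of the red flags analysts reportedly spotted in the MAHA report: URLs containing the “oaicite” marker and repeated references. The function names and sample data are hypothetical, and a match is only a hint that a citation deserves a human look, not proof of AI authorship.

```python
from collections import Counter

# Marker string reported in coverage of the MAHA report's URLs.
MARKER = "oaicite"


def flag_marker_urls(urls):
    """Return the URLs that contain the marker (case-insensitive)."""
    return [url for url in urls if MARKER in url.lower()]


def find_repeated(references):
    """Return normalized reference strings that appear more than once."""
    counts = Counter(ref.strip().lower() for ref in references)
    return sorted(ref for ref, n in counts.items() if n > 1)


# Hypothetical sample data, for illustration only.
sample_urls = [
    "https://example.org/study-a",
    "https://example.org/study-b?utm_source=oaicite:3",
]
sample_refs = ["Smith 2020", "smith 2020 ", "Lee 2019"]

print(flag_marker_urls(sample_urls))
print(find_repeated(sample_refs))
```

A check like this cannot tell whether a cited study actually exists; that still requires looking up each reference by hand.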

AI BREACHES (6)

6 - Palantir’s AI Data Integration Sparks U.S. Surveillance Concerns

The Briefing

Several reports reveal that Palantir, the AI-focused contractor connected to Peter Thiel, was commissioned by the Trump administration to consolidate data from multiple federal agencies into digital IDs for Americans. This move sparks serious worries about privacy breaches and potential misuse, underscoring a growing AI-related risk that demands close scrutiny.

✔️ It affects the AI Principles of Transparency, Privacy, Data Governance, and Safety.

✔️ The Severity classification is Hazard

✔️ Affected Audience are Government, Security, Defense and IT Infra/Hosting

💁 Why is it a Breach?

The expansion of Palantir’s AI-driven data analysis to compile information on Americans poses a significant risk of enabling mass surveillance and targeting of specific groups, raising serious concerns about potential human rights violations and privacy breaches. Although no direct harm has yet been documented, the capability of the AI system to process and act on vast amounts of personal data without adequate oversight creates a clear AI Hazard. This situation could realistically escalate into an AI Incident involving unlawful surveillance or misuse of personal information, representing a breach of ethical standards and legal protections around privacy and human rights.
