AI Incident Monitor - Jul 2025 List

Unitree Humanoid Robot Malfunctions!! - ALSO: China Presses Nvidia Over Alleged Backdoor Risks

Editor’s Blurb 📢😲

Welcome to the July 2025 AI Incidents List. As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess - it needs facts, forward thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: July 2025 - Key AI Breaches

OpenAI CEO Cautions on ChatGPT Agent, Your Data and Sensitive Data Risks

Atlassian Cuts 150 Jobs as AI Replaces Support and Operations Roles

OpenAI Pulls ChatGPT Sharing Feature After Private Chats Surface on Google

China Presses Nvidia Over Alleged Backdoor Risks in H20 AI Chip

Constitutional Challenge Filed Against Palantir’s AI Policing Software in Bavaria

Unitree Humanoid Robot Malfunction Sparks Safety Concerns After Lab Accident

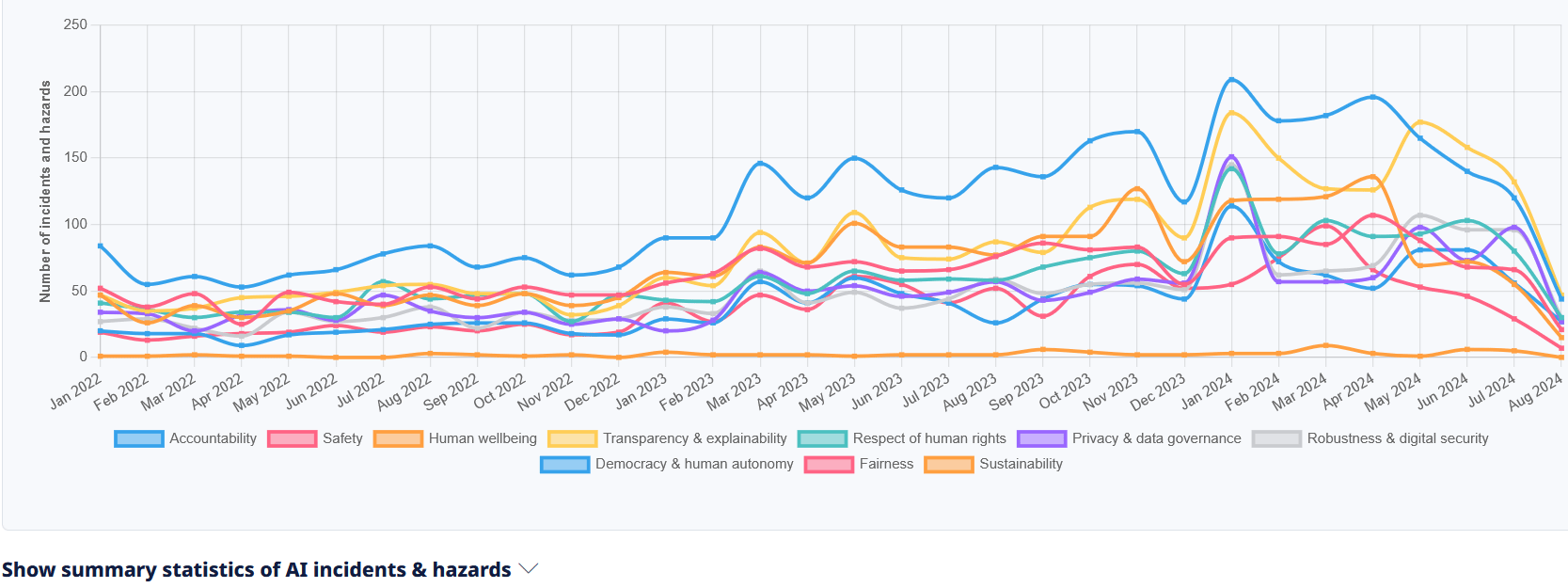

Total Number of AI Incidents by Hazard - Jan to Aug 2025

AI BREACHES (1)

1 - OpenAI CEO Cautions on ChatGPT Agent, Your Data and Sensitive Data Risks

The Briefing

OpenAI CEO Sam Altman has raised concerns over the risks of giving ChatGPT Agent broad access to personal data - particularly email accounts. He warned that malicious actors could exploit the system to extract sensitive information, urging users to be cautious. Altman advised limiting permissions and avoiding the use of ChatGPT Agent for high-stakes or personal data until stronger safeguards are in place.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Safety, Accountability

✔️ The Severity is Hazard

✔️ Harm Type is Psychological, Economic/Property, Human rights

💁 Why is it a Breach?

It involves an AI system (ChatGPT Agent) and its potential misuse. Sam Altman's warnings that the agent could be tricked into revealing sensitive information indicate a credible risk of harm (privacy violations) that could plausibly occur. However, he has not described any realized harm or incident; this is a cautionary advisory about potential vulnerabilities and risks.

AI BREACHES (2)

2 - Atlassian Cuts 150 Jobs as AI Replaces Support and Operations Roles

The Briefing

Atlassian has announced the layoff of 150 employees, mainly in support and operations, as part of a shift toward AI-driven automation. The move, delivered in a pre-recorded message from CEO Mike Cannon-Brookes, underscores how AI adoption is directly reshaping workforce structures - boosting efficiency but raising concerns about the human cost of automation.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Human wellbeing, Fairness, Transparency & explainability

✔️ The Severity is Non-physical Harm

✔️ Harm Type is Economic/Property, Psychological

💁 Why is it a Breach?

Around 150 jobs were replaced by AI, indicating the use of an AI system in the workforce reduction. The harm is realized as employees lose their jobs, which is a violation of labor rights. The CEO's communication method and the environmental commentary are contextual but do not negate the direct harm caused by AI replacing human jobs. Hence, this is an AI Incident involving harm to labor rights due to AI use.

AI BREACHES (3)

3 - OpenAI Pulls ChatGPT Sharing Feature After Private Chats Surface on Google

The Briefing

OpenAI has discontinued its ChatGPT sharing feature after reports revealed that private user conversations - including personal information - were being indexed by Google and other search engines. The exposure sparked public backlash as thousands of chats became searchable online. In response, OpenAI swiftly removed the feature to prevent further privacy risks and data leaks.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Accountability, Transparency & explainability

✔️ The Severity is Non-physical Harm

✔️ Harm Type is Psychological, Economic/Property, Reputational, Human rights

💁 Why is it a Breach?

The event involves an AI system (ChatGPT) whose outputs (user chats) are shared and then indexed by a search engine, leading to exposure of private and sensitive information. This exposure constitutes a violation of privacy rights, a form of harm to individuals. The harm is directly linked to the use of the AI system and the sharing mechanism that makes the data publicly accessible. The article highlights that users are often unaware that sharing a link makes their chats public and searchable, which indicates a failure in user understanding and possibly in the system's design or communication. This meets the criteria for an AI Incident as the AI system's use has directly led to harm (privacy violations).

AI BREACHES (4)

4 - China Presses Nvidia Over Alleged Backdoor Risks in H20 AI Chip

The Briefing

Chinese regulators have raised security concerns about Nvidia’s H20 AI chip, recently cleared for export to China. Authorities claim the chip may contain hidden backdoors that could allow unauthorized access, location tracking, or even remote shutdown. Nvidia has denied the allegations, but officials are demanding proof to safeguard user data and national network security.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Robustness & digital security, Accountability, Transparency & explainability

✔️ The Severity is Hazard

✔️ Harm Type is Public interest, Economic/Property, Reputational

💁 Why is it a Breach?

The Nvidia H20 chip is an AI system component. The reported backdoor security vulnerability poses a credible risk of harm to data safety and user privacy, which aligns with potential violations of rights and harm to communities. The Chinese authorities' actions to summon Nvidia for explanations and evidence indicate concern over these risks. However, the article does not report any actual harm or incident caused by the chip's malfunction or use, only the identification of a security risk and regulatory scrutiny. Hence, this qualifies as an AI Hazard, reflecting plausible future harm rather than a realized AI Incident.

Total Number of Incidents - Rolling Average to August 2025

AI BREACHES (5)

5 - Constitutional Challenge Filed Against Palantir’s AI Policing Software in Bavaria

The Briefing

The Society for Civil Rights (GFF) has filed a constitutional complaint over the Bavarian police’s use of Palantir’s AI-powered data analysis system. Critics argue the platform processes vast amounts of personal data - including information on non-suspects - without sufficient safeguards, violating privacy, data protection, and telecommunications secrecy. The case highlights the growing legal and ethical debate over AI in law enforcement.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Respect of human rights, Accountability, Transparency & explainability

✔️ The Severity is Non-physical Harm

✔️ Harm Type is Human rights, Public interest

💁 Why is it a Breach?

The Palantir software is an AI system used by the police to analyze and link large datasets for criminal investigations. The complaint alleges that its use violates constitutional rights, including data privacy and telecommunications secrecy, affecting individuals who are not suspects. This constitutes a violation of fundamental rights (harm category c) directly linked to the AI system's use. Therefore, this event qualifies as an AI Incident because the AI system's use has directly led to alleged harm in terms of rights violations, prompting legal action at the constitutional court level.

AI BREACHES (6)

6 - Unitree Humanoid Robot Malfunction Sparks Safety Concerns After Lab Accident

The Briefing

A Unitree H1 humanoid robot malfunctioned during testing, thrashing uncontrollably while suspended from a crane. The failure caused major equipment damage - including the crane’s collapse - and narrowly avoided injuring nearby technicians. The incident raises fresh concerns about the safety, reliability, and risk management of advanced AI-driven robotics.

Potential AI Impact!!

✔️ It affects the AI Principles of Safety, Robustness & digital security, Accountability

✔️ The Industries affected are Robots, sensors, IT hardware, IT infrastructure and hosting

✔️ Harm Type is Physical, Economic/Property

💁 Why is it a Breach?

The Unitree H1 humanoid robot is an AI system designed for autonomous or semi-autonomous dynamic movement. The violent thrashing and crash during testing, which nearly injured technicians, constitute a malfunction during use that directly endangered human safety. This fits the definition of an AI Incident, as the AI system's malfunction directly led to a safety hazard with plausible harm to people. The event is not merely a potential hazard but an actual occurrence with realized risk, so it qualifies as an AI Incident rather than an AI Hazard or Complementary Information. The lack of reported injury does not negate the direct risk and near-miss nature of the event.
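
The "Why is it a Breach?" notes in this list keep returning to one distinction: harm that has been realized (AI Incident) versus harm that is only plausible (AI Hazard). As a rough illustration, that rule of thumb can be sketched in a few lines of Python. This is a simplified sketch and not AIM's actual methodology; the `Event` fields and the `classify` function are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical summary of a reported event (illustrative fields only)."""
    involves_ai_system: bool
    harm_realized: bool   # harm or damage has actually occurred
    harm_plausible: bool  # harm has not occurred but could credibly occur


def classify(event: Event) -> str:
    """Apply the simplified Incident / Hazard rule of thumb."""
    if not event.involves_ai_system:
        return "Out of scope"
    if event.harm_realized:
        return "AI Incident"
    if event.harm_plausible:
        return "AI Hazard"
    return "Complementary Information"


# Entry 4 (Nvidia H20): a risk was raised, but no actual harm was reported.
nvidia_h20 = Event(involves_ai_system=True, harm_realized=False, harm_plausible=True)

# Entry 3 (ChatGPT sharing): private chats were actually exposed.
chatgpt_sharing = Event(involves_ai_system=True, harm_realized=True, harm_plausible=True)

print(classify(nvidia_h20))
print(classify(chatgpt_sharing))
```

Real classifications involve judgment calls that a boolean flag cannot capture, such as the near-miss in entry 6, so treat this only as a reading aid for the labels above.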