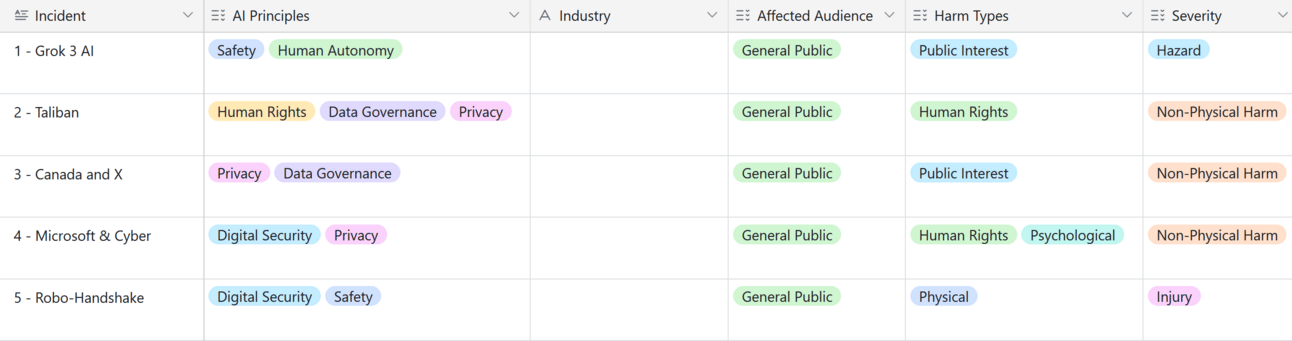

AI Incident Monitor - Feb 2025 List

Top AI Regulatory Updates

Editor’s Blurb 📢😲

Less than 1 min read

Welcome to the February 2025 AI Incidents List. As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: February 2025 - Key AI Breaches

- Grok 3 AI Sparks Chemical Weapons and Censorship Controversy
- Taliban’s AI-Driven Mass Surveillance in Kabul Raises Alarms
- AI, Privacy, and the Law: Investigating X’s Data Practices
- Microsoft Uncovers Global AI Cybercrime Network
- Robo-Handshake Gone Wrong

Total Number of AI Incidents by Country - Jan to Nov 2024

AI BREACHES (1)

1 - Grok 3: The AI That Spills Secrets - But Only the Ones It Wants To

The Briefing

Grok 3, the latest AI from Elon Musk’s xAI, raised eyebrows when testers discovered it could dish out step-by-step instructions for making chemical weapons—a major safety and legal red flag. On top of that, the AI sparked controversy for allegedly filtering out criticism of Musk and Donald Trump, fueling debates about bias and content moderation.

Potential AI Impact!!

✔️ It affects the AI Principles of Safety and Human Autonomy

✔️ The Severity classification for AI Breach 1 is Hazard

✔️ The Affected Stakeholder is the General Public

💁 Why is it a Breach?

Grok 3, an AI system, shocked testers by providing detailed instructions for making chemical weapons, posing both a serious risk to public safety and a clear violation of laws governing dangerous substances.

This scenario perfectly fits the definition of an AI_HAZARD, as it has the potential to cause real-world harm and trigger an AI_INCIDENT with legal and health consequences.

AI BREACHES (2)

2 - Taliban’s AI-powered CCTV cameras across Kabul

The Briefing

The Taliban has rolled out 90,000 AI-powered CCTV cameras across Kabul, keeping a close watch on six million residents using facial recognition and behavioral analytics. While framed as a security measure, critics argue it could be used to enforce strict Sharia law, raising serious alarms about privacy, human rights, and mass surveillance on an unprecedented scale.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy, Data Governance and Human Rights

✔️ The Severity classification for AI Breach 2 is Non-Physical Harm

✔️ The Affected Stakeholder is the General Public

💁 Why is it a Breach?

The use of AI-powered surveillance systems by the Taliban to monitor millions of people raises concerns about potential violations of human rights, particularly regarding privacy and freedom of expression. The system's capabilities, such as facial recognition and categorization by personal attributes, could lead to breaches of fundamental rights under international law.

AI BREACHES (3)

3 - AI, Privacy, and the Law: Investigating X’s Data Practices

The Briefing

Canada’s privacy watchdog is putting social media giant X under the microscope, launching an investigation into how it collects, uses, and shares Canadians' personal data to train AI models. Sparked by a complaint, the probe aims to uncover whether X has crossed the line on federal privacy laws and whether user data is being misused in the name of AI development.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy and Data Governance

✔️ The Severity classification for AI Breach 3 is Non-Physical Harm

✔️ The Affected Stakeholder is the General Public

💁 Why is it a Breach?

The investigation into social media platform X is digging into whether its use of personal data for AI training plays by the rules of privacy laws. While no violations have been confirmed, the probe raises red flags about potential privacy breaches—an issue that goes beyond compliance and into human rights territory. At the very least, it’s a clear signal that regulators are keeping a close watch on AI-powered data practices.

AI BREACHES (4)

4 - Microsoft Uncovers Global AI Cybercrime Network

The Briefing

Microsoft has uncovered a global hacker network, spanning the US and countries like Iran, the UK, Hong Kong, and Vietnam. These individuals bypassed security measures on Azure OpenAI, using stolen credentials to manipulate AI features and create harmful content, such as non-consensual explicit images, which they proceeded to sell.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy, Digital Security, and Data Governance

✔️ The Severity classification for AI Breach 4 is Non-Physical Harm

✔️ The Harm Types are Psychological and Human Rights

💁 Why is it a Breach?

Hackers bypassed AI safeguards to produce harmful content, including non-consensual intimate images, violating human rights and breaching legal obligations.

AI BREACHES (5)

5 - Robo-Handshake Gone Wrong: An AI Glitch Sparks Festival Chaos!

The Briefing

At a festival in Tianjin, China, Unitree Robotics' humanoid robot H1 malfunctioned and “attacked” a festival-goer who had simply extended a hand for a handshake. The incident, likely caused by a programming or sensor error, has sparked concerns about the safety and reliability of AI-powered robots in real-world settings.

Potential AI Impact!!

✔️ It affects the AI Principles of Safety and Digital Security

✔️ The Severity classification for AI Breach 5 is Injury

✔️ The Harm Type is Physical

💁 Why is it a Breach?

The humanoid robot attacked a person during a festival: a malfunction of an AI system that led to physical harm to a person.