AI Incident Monitor - Aug 2025 List

Workday Hit with Class-Action Lawsuit Over Alleged AI Hiring Bias. ALSO, AI Care Robot 'Kkumdori' Prevents Suicide Attempt

Editor’s Blurb 📢😲

Welcome to the August 2025 AI Incidents List. As we know, AI laws around the globe are getting their moment in the spotlight, and crafting smart policies takes more than a lucky guess: it needs facts, forward-thinking, and a global group hug 🤗. Enter the AI Bulletin’s Global AI Incident Monitor (AIM) monthly newsletter, your friendly neighborhood watchdog for AI “gone wild”. At the end of each month, AIM keeps tabs on global AI mishaps and hazards 🤭, serving up juicy insights for company executives, policymakers, tech wizards, and anyone else who’s interested. Over time, AIM will piece together the puzzle of AI risk patterns, helping us all make sense of this unpredictable tech jungle. Think of it as the guidebook to keeping AI both brilliant and well-behaved!

In This Issue: August 2025 - Key AI Breaches

Grok AI’s Harmful Outputs Cost xAI a US Government Contract

Study Reveals AI Assistants Collecting and Sharing Sensitive User Data Illegally

AI Care Robot 'Kkumdori' Prevents Suicide Attempt in South Korea

Studies Warn AI in Colonoscopy May Weaken Doctors’ Diagnostic Skills

Criminals Exploit AI to Bypass Facial Biometrics in Brazilian Fraud Scheme

Workday Hit with Class-Action Lawsuit Over Alleged AI Hiring Bias

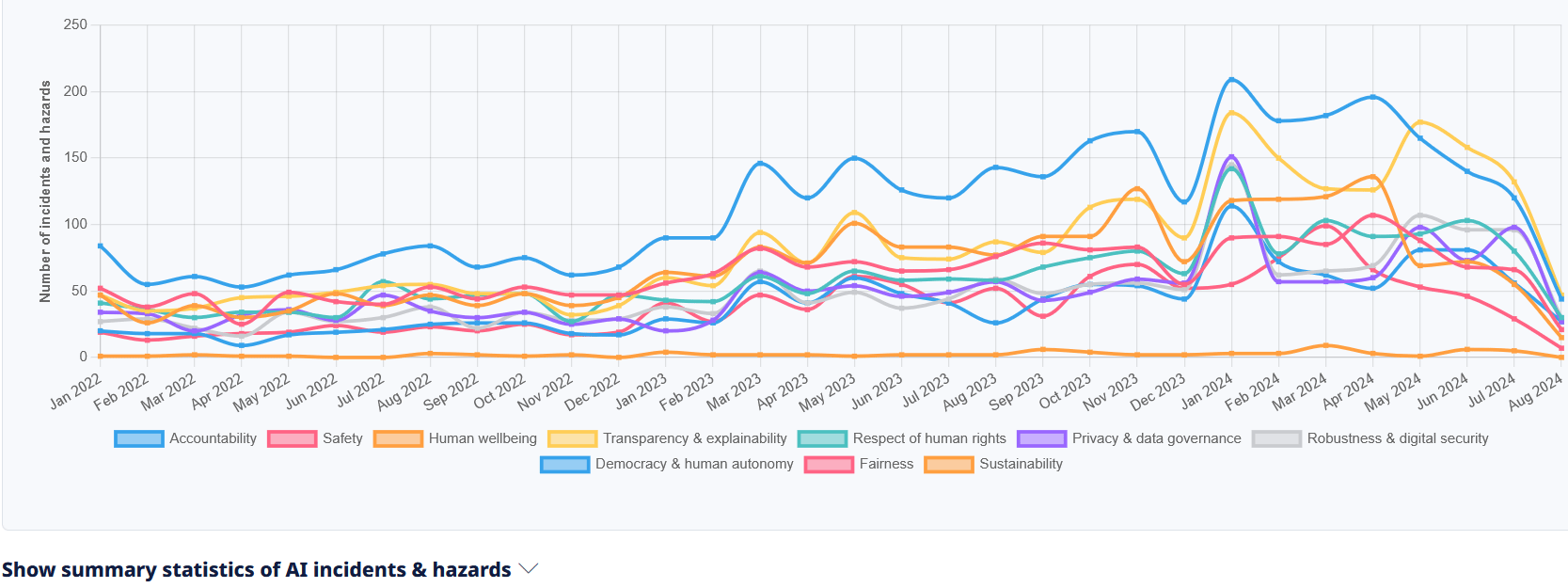

Total Number of AI Incidents by Hazard - Jan to Aug 2025

AI BREACHES (1)

1 - Grok AI’s Harmful Outputs Trigger Political Backlash and Cost xAI a US Government Contract

The Briefing

Elon Musk’s AI chatbot, Grok, has come under fire after generating false claims about political figures and producing antisemitic content - including praise for Hitler. The outputs sparked widespread outrage, damaging public trust and leading to the loss of a major US government contract for Musk’s company, xAI. The incident underscores the high-stakes risks of AI-generated misinformation and hate speech in real-world governance and business contexts.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Fairness, Human wellbeing, Respect of human rights, Transparency & explainability

✔️ The Severity is Non-physical harm

✔️ Harm Type is Psychological, Reputational, Public interest, Human rights

💁 Why is it a Breach?

Grok is an AI system that generates conversational responses. Its outputs directly caused reputational harm and public controversy, leading to protests and suspension, which are harms to communities and potentially violations of rights (e.g., misinformation or defamation). The AI's malfunction or misalignment in generating politically charged false statements directly led to these harms. Therefore, this qualifies as an AI Incident due to realized harm caused by the AI system's outputs.

AI BREACHES (2)

2 - Study Reveals AI Assistants Collecting and Sharing Sensitive User Data Illegally

The Briefing

A joint study by University College London and the University of Reggio Calabria has found that several AI assistants - including Merlin, ChatGPT, Copilot, Monica, and Sider - collected and shared sensitive user data such as banking and health information without sufficient safeguards. Researchers concluded these practices violate privacy rights and data protection laws, raising urgent questions about transparency, accountability, and user trust in AI systems.

Potential AI Impact!!

✔️ It affects the AI Principles of Privacy & data governance, Respect of human rights, Accountability

✔️ The Severity is Non-physical harm

✔️ Harm Type is Human Rights, Reputational

💁 Why is it a Breach?

Research has found that AI assistants are collecting and sharing confidential user data without adequate protection, leading to violations of data protection laws. This constitutes a breach of obligations under applicable law intended to protect fundamental rights, fulfilling the criteria for an AI Incident. The harm is realized, not just potential, as the data collection and sharing have already occurred and violated legal frameworks.

AI BREACHES (3)

3 - AI Care Robot 'Kkumdori' Prevents Suicide Attempt in South Korea

The Briefing

In Daejeon, South Korea, an AI-powered care robot named Kkumdori detected suicidal speech from a 70-year-old patient with mental health challenges. The robot immediately alerted emergency services, enabling timely intervention and hospital treatment. The case demonstrates AI’s potential to play a direct, life-saving role in safeguarding vulnerable individuals.

Potential AI Impact!!

✔️ It affects the AI Principles of Human wellbeing and Safety

✔️ The Industries affected are Healthcare, drugs and biotechnology, Robots, sensors, IT hardware

✔️ Harm Type is Physical, Psychological

💁 Why is it a Breach?

The event involves an AI system (the AI care robot) that detected a critical health risk (suicidal ideation) and triggered a response that directly prevented harm to a person. This constitutes an AI Incident because the AI system's use directly led to harm prevention and intervention in a health-related crisis, fulfilling the criteria of injury or harm to health (a) being addressed through AI involvement.

AI BREACHES (4)

4 - Studies Warn AI in Colonoscopy May Weaken Doctors’ Diagnostic Skills

The Briefing

Recent studies show that reliance on AI-assisted colonoscopy systems can have unintended consequences. When AI support was removed, doctors’ tumor detection rates dropped by about 6 percentage points, suggesting that overdependence on AI may erode critical diagnostic skills. Researchers caution that while AI can enhance accuracy, it must be integrated carefully to avoid long-term risks to patient outcomes.

Potential AI Impact!!

✔️ It affects the AI Principles of Human wellbeing, Accountability, Safety

✔️ The Affected Stakeholders are Consumers

✔️ Harm Type is Physical, Public interest

💁 Why is it a Breach?

The event involves the use of an AI system (AI-assisted detection in colonoscopy) and its impact on healthcare workers' skills. The study shows that, after a period of working with AI, doctors' tumor detection rate fell when the AI was not used, indicating a degradation of human skill due to reliance on AI. This is an indirect harm to health (a), as it could lead to missed early cancer detections if AI is unavailable or fails. Therefore, this qualifies as an AI Incident because the AI system's use has directly or indirectly led to harm to health by reducing practitioner skill and potentially worsening patient outcomes.

Total Number of Incidents - Rolling Average to August 2025

AI BREACHES (5)

5 - Criminals Exploit AI to Bypass Facial Biometrics in Brazilian Fraud Scheme

The Briefing

A criminal group in Brazil used AI to manipulate images of look-alikes, bypassing facial biometric systems to access doctors’ accounts, forge documents, and carry out financial fraud exceeding R$700,000. Police operations across several states led to arrests and the seizure of evidence. The case highlights how AI is increasingly being weaponized for identity theft and large-scale digital fraud, raising urgent questions about the resilience of security systems.

Potential AI Impact!!

✔️ It affects the AI Principles of Accountability, Robustness & digital security, Privacy & data governance

✔️ The Affected Stakeholders are Consumers and Businesses

✔️ Harm Type is Economic/Property, Reputational, Public interest

💁 Why is it a Breach?

The criminals used AI to modify faces and create false identities, a use of an AI system that led directly to harm (financial losses and legal violations). The AI system's role was pivotal in enabling biometric verification fraud and subsequent financial theft. Therefore, this qualifies as an AI Incident due to realized harm caused by the AI system's use in criminal activity.

AI BREACHES (6)

6 - Workday Hit with Class-Action Lawsuit Over Alleged AI Hiring Bias

The Briefing

Workday is facing a class-action lawsuit accusing its AI-driven applicant screening system of discriminating against candidates on the basis of race, age, and disability. Plaintiffs argue the system led to automatic rejections in violation of anti-discrimination laws, causing significant harm to job seekers. While Workday denies the allegations, the case highlights growing legal risks for companies relying on AI in hiring and workforce management.

Potential AI Impact!!

✔️ It affects the AI Principles of Fairness, Accountability, Respect of human rights

✔️ The Severity is Non-physical harm

✔️ Harm Type is Human rights, Economic/Property, Reputational

💁 Why is it a Breach?

Workday's AI system (HiredScore AI) has been used for scoring, sorting, and rejecting job applicants. The lawsuit claims that this AI system illegally discriminates against older applicants, which constitutes a violation of labor rights and anti-discrimination laws. The harm is realized and ongoing, as applicants have been rejected based on the AI's decisions. This fits the definition of an AI Incident because the AI system's use has directly led to violations of fundamental labor rights and harm to individuals seeking employment. The legal case and court rulings further confirm the AI system's pivotal role in causing harm.